Technology

Data Products, Data Contracts, and Change Data Capture

Change data capture (CDC) is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Contributing to Apache Kafka®: How to Write a KIP

Learn how to contribute to open source Apache Kafka by writing Kafka Improvement Proposals (KIPs) that solve problems and add features! Read on for real examples.

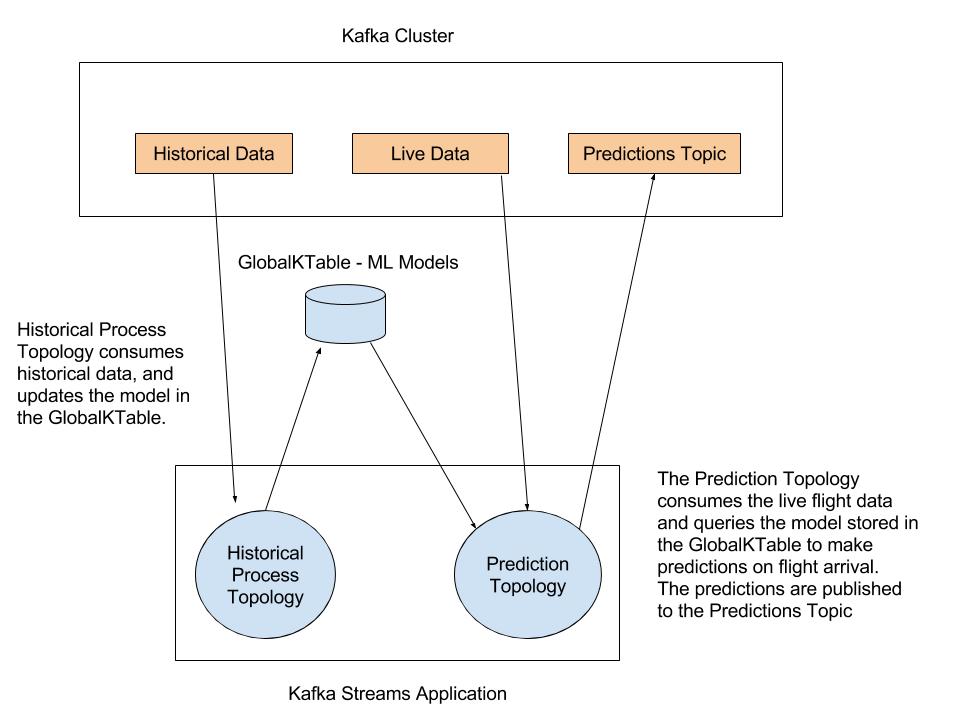

Predicting Flight Arrivals with the Apache Kafka Streams API

Kafka Streams makes it easy to write scalable, fault-tolerant, and real-time production apps and microservices. This post builds upon a previous post that covered scalable machine learning with Apache Kafka, […]

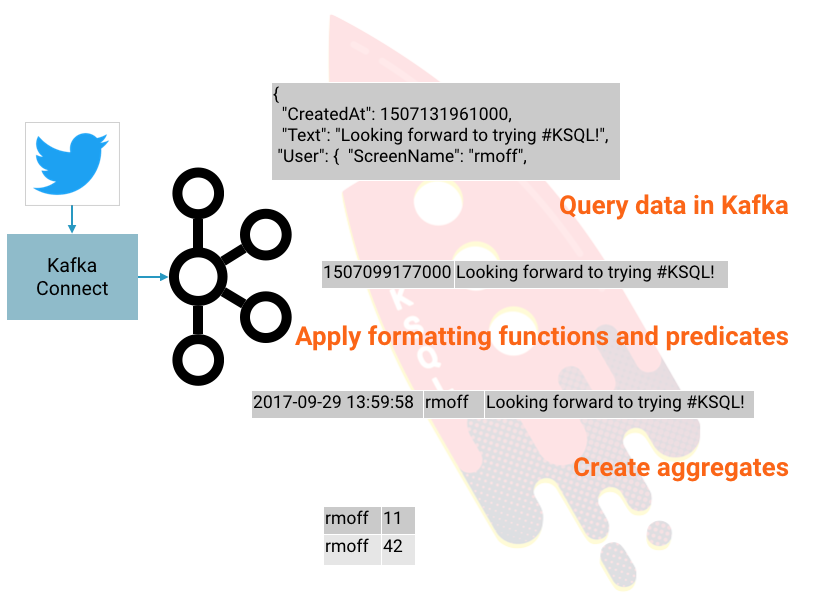

Getting Started Analyzing Twitter Data in Apache Kafka through KSQL

KSQL is the streaming SQL engine for Apache Kafka®. It lets you do sophisticated stream processing on Kafka topics, easily, using a simple and interactive SQL interface. In this short […]

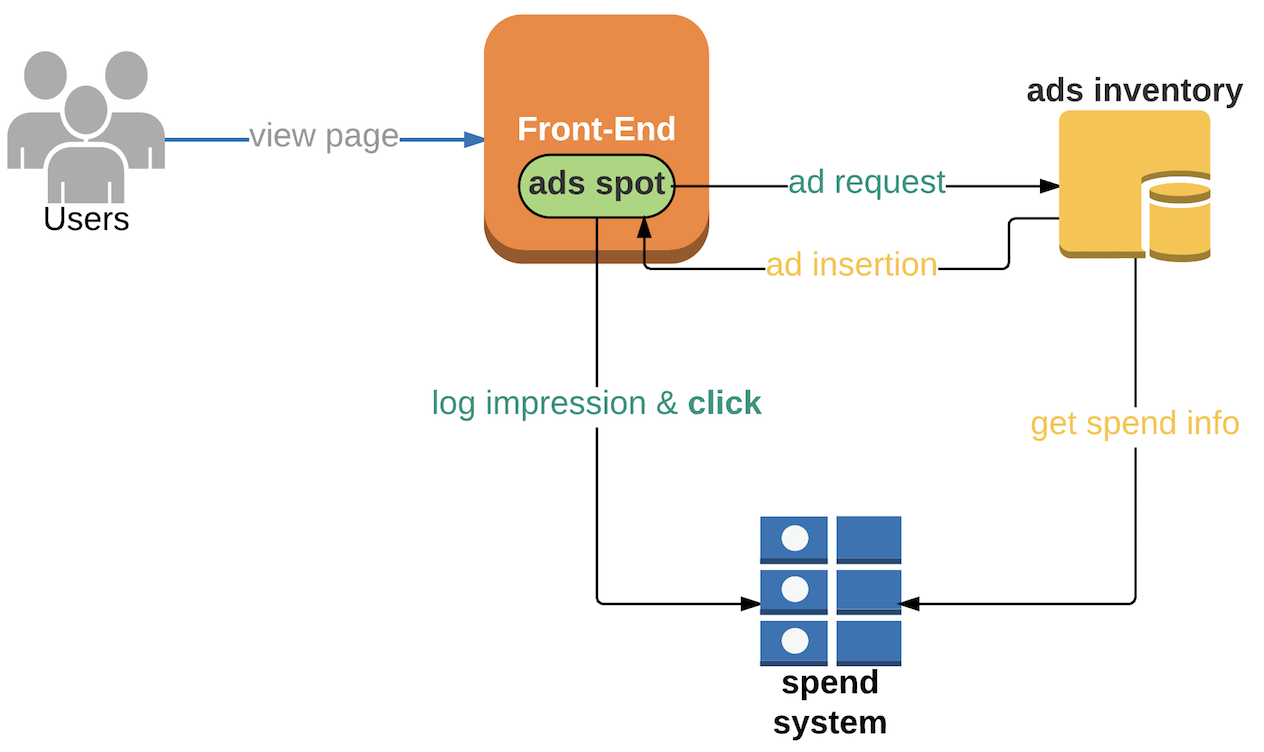

Using Kafka Streams API for Predictive Budgeting

At Pinterest, we use Kafka Streams API to provide inflight spend data to thousands of ads servers in mere seconds. Our ads engineering team works hard to ensure we’re providing […]

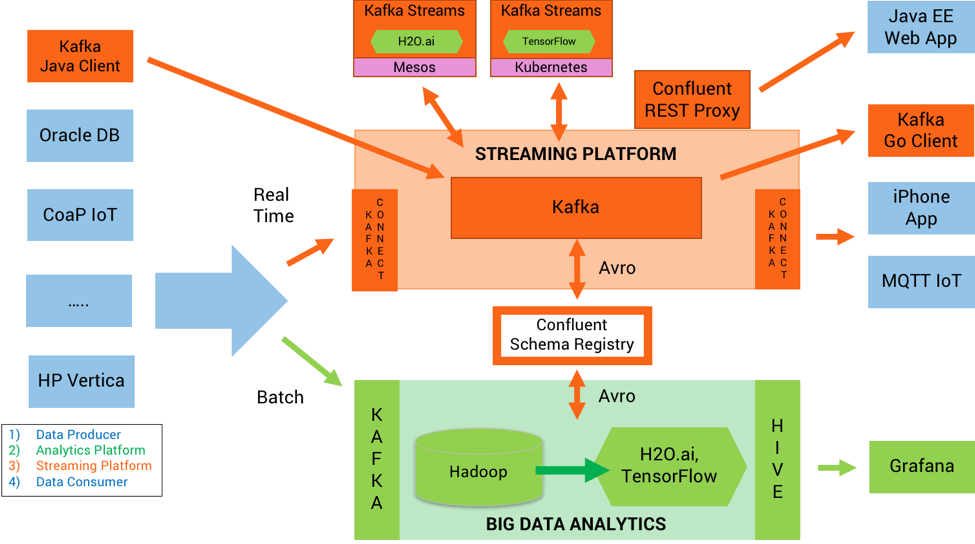

How to Build and Deploy Scalable Machine Learning in Production with Apache Kafka

Intelligent real-time applications are a game changer in any industry. Machine learning and its sub-topic, deep learning, are gaining momentum because […]

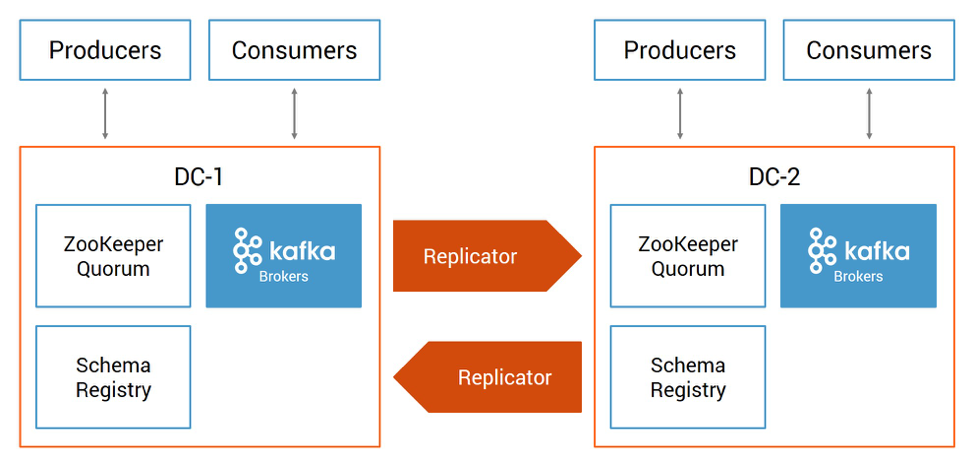

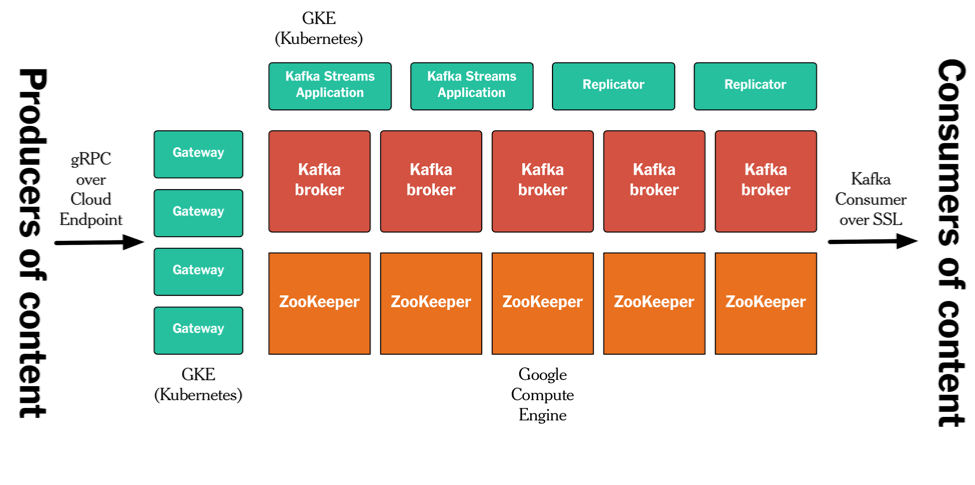

Disaster Recovery for Multi-Datacenter Apache Kafka Deployments

Datacenter downtime and data loss can result in businesses losing a vast amount of revenue or entirely halting operations. To minimize the downtime and data loss resulting from a disaster, […]

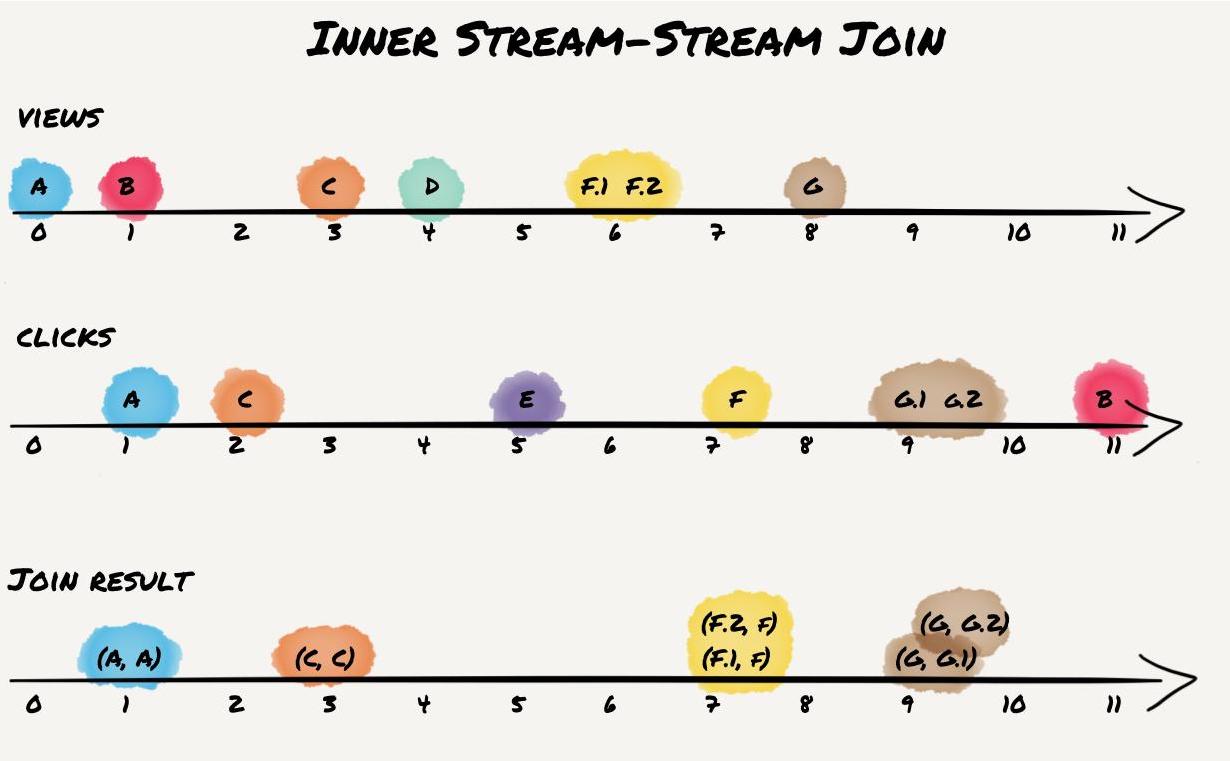

Crossing the Streams – Joins in Apache Kafka

This post was originally published at the Codecentric blog with a focus on “old” join semantics in Apache Kafka versions 0.10.0 and 0.10.1. Version 0.10.0 of the popular distributed streaming […]

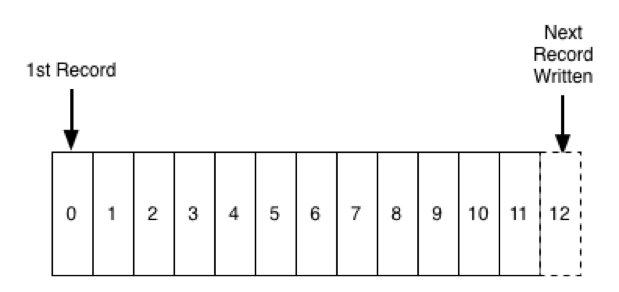

It's Okay To Store Data In Kafka

A question people often ask about Apache Kafka® is whether it is okay to use it for longer term storage. Kafka, as you might know, stores a log of records, […]

How Apache Kafka is Tested

Apache Kafka® is used in thousands of companies, including some of the most demanding, large scale, and critical systems in the world. Its largest users run Kafka across thousands […]

The Simplest Useful Kafka Connect Data Pipeline in the World…or Thereabouts – Part 3

We saw in the earlier articles (part 1, part 2) in this series how to use the Kafka Connect API to build out a very simple, but powerful and scalable, streaming […]

Publishing with Apache Kafka at The New York Times

At The New York Times we have a number of different systems that are used for producing content. We have several Content Management Systems, and we use third-party data and […]

Introducing KSQL: Streaming SQL for Apache Kafka

Note: ksqlDB is the successor to KSQL. Read the announcement to learn more. To get started with ksqlDB in Confluent Cloud, you can sign up for fully managed Apache Kafka […]

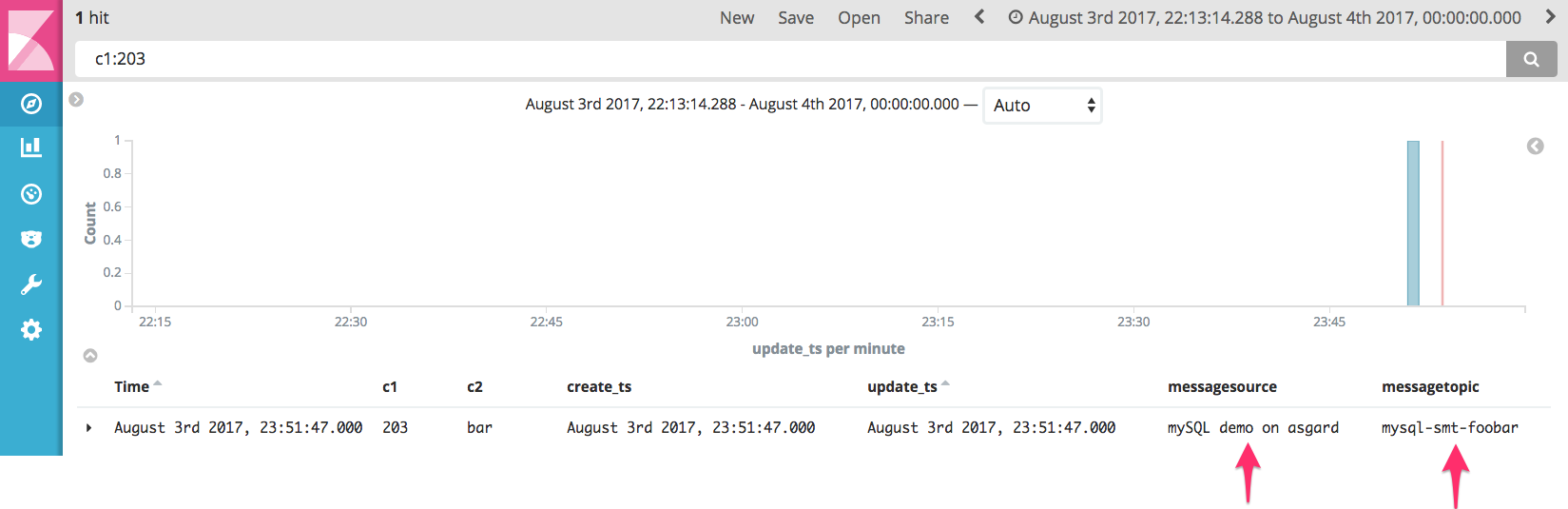

The Simplest Useful Kafka Connect Data Pipeline in the World…or Thereabouts – Part 2

In the previous article in this blog series, I showed how easy it is to stream data out of a database into Apache Kafka®, using the Kafka Connect API. I […]

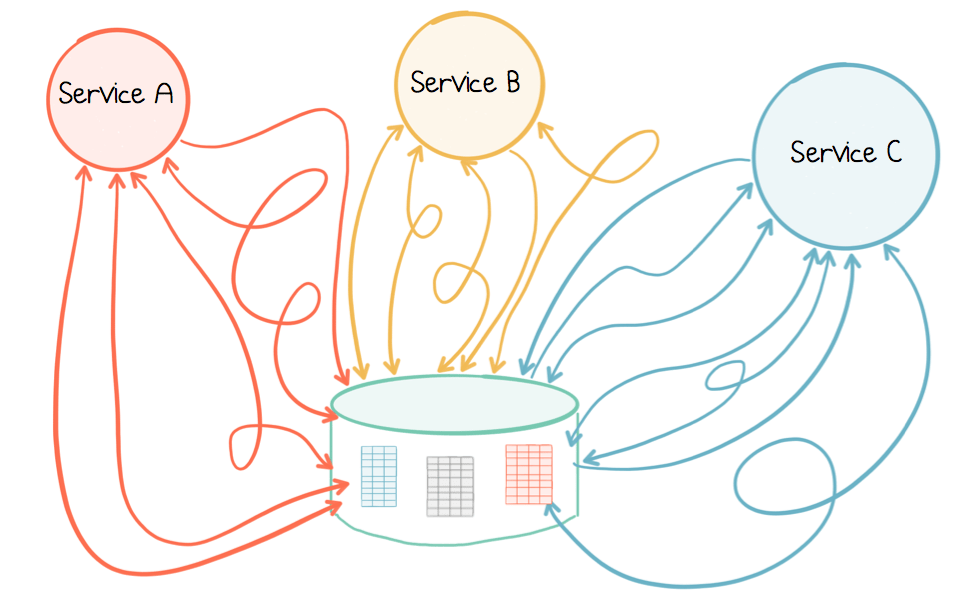

Leveraging the Power of a Database ‘Unbundled’

When you build microservices using Apache Kafka®, the log can be used as more than just a communication protocol. It can be used to store events: messaging that remembers. This […]

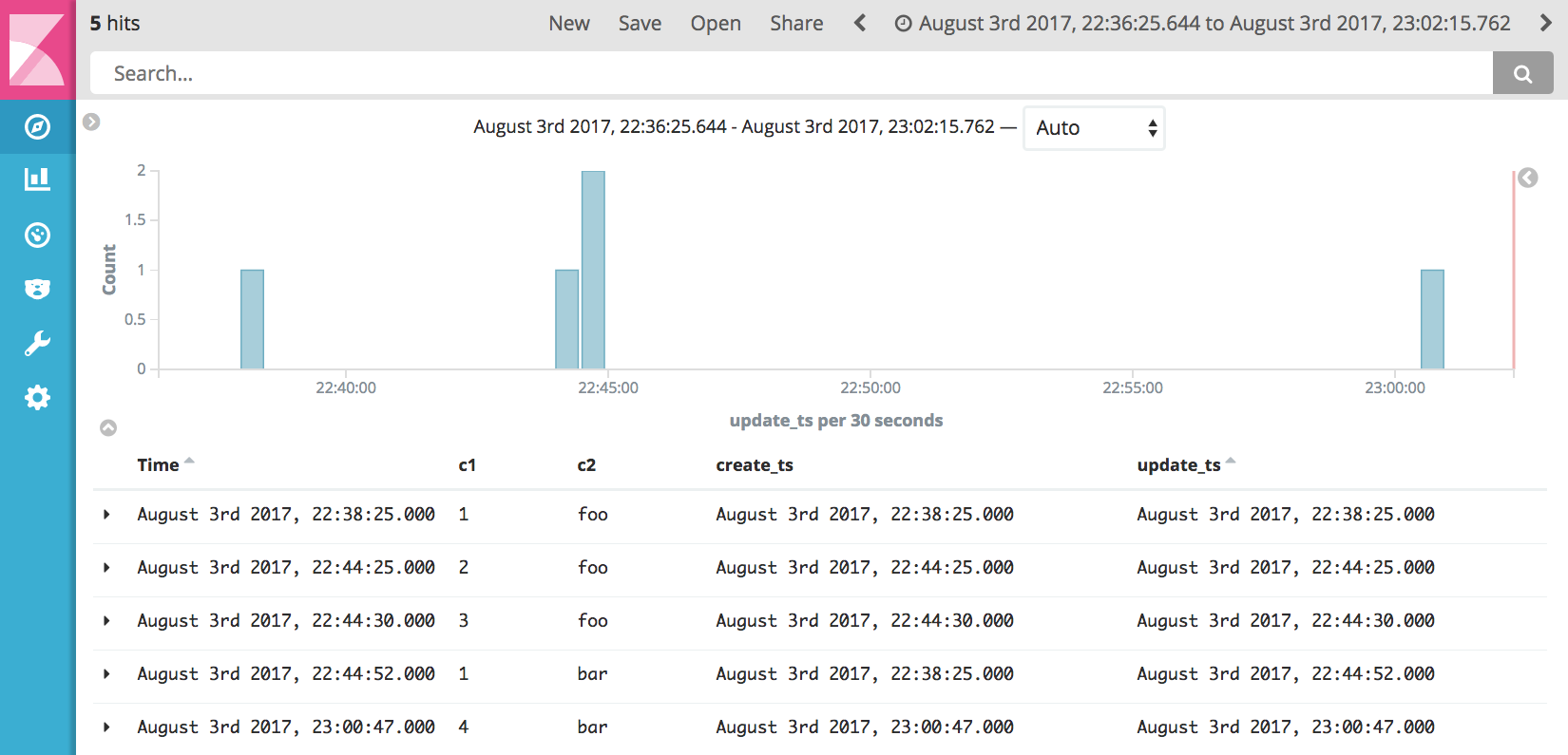

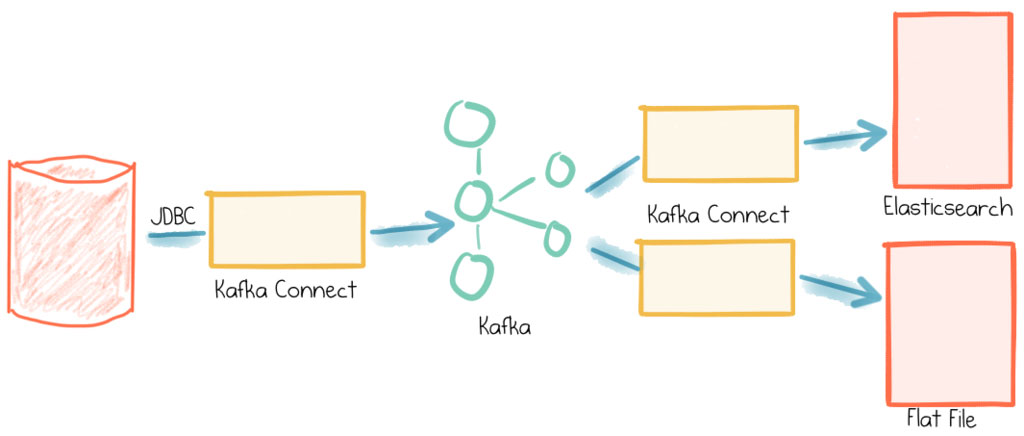

The Simplest Useful Kafka Connect Data Pipeline in the World…or Thereabouts – Part 1

This short series of articles is going to show you how to stream data from a database (MySQL) into Apache Kafka® and from Kafka into both a text file and Elasticsearch—all […]