Technology

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Contributing to Apache Kafka®: How to Write a KIP

Learn how to contribute to open source Apache Kafka by writing Kafka Improvement Proposals (KIPs) that solve problems and add features! Read on for real examples.

Announcing Confluent Cloud: Apache Kafka® as a Service

Today, I’m really excited to announce Confluent Cloud™, Apache Kafka® as a Service: the simplest, fastest, most robust and cost-effective way to run Apache Kafka in the public cloud. […]

The 2017 Apache Kafka Survey: Streaming Data on the Rise

In Q1, Confluent conducted a survey of the Apache Kafka® community and those using streaming platforms to learn about their application of streaming data. This is our second execution of […]

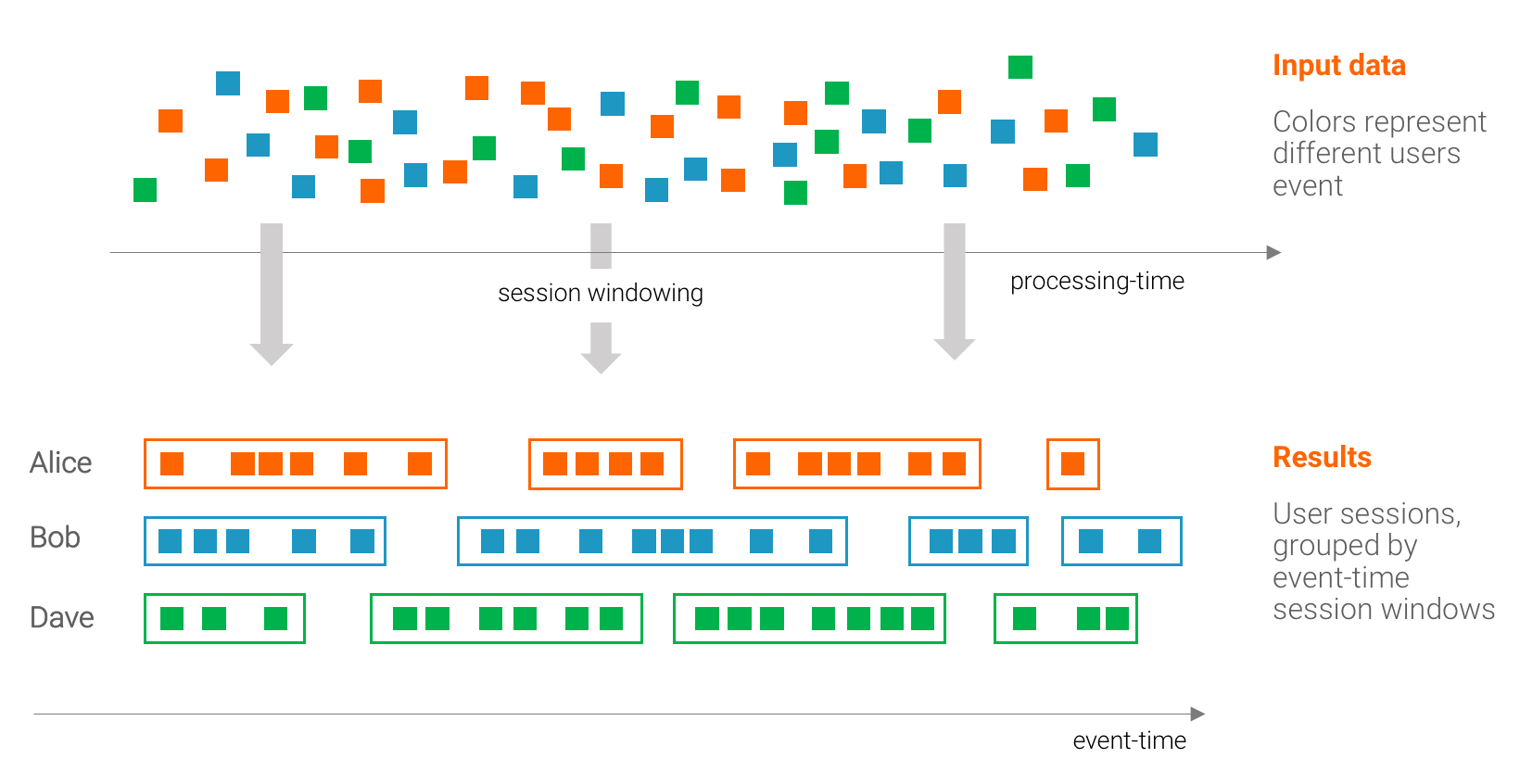

Watermarks, Tables, Event Time, and the Dataflow Model

The Google Dataflow team has done a fantastic job in evangelizing their model of handling time for stream processing. Their key observation is that in most cases you can’t globally […]

Stories from the Front: Lessons Learned from Supporting Apache Kafka®

Here at Confluent, our goal is to ensure every company is successful with their streaming platform deployments. Oftentimes, we’re asked to come in and provide guidance and tips as developers […]

Creating a Data Pipeline with the Kafka Connect API – from Architecture to Operations

Pandora began adoption of Apache Kafka® in 2016 to orient its infrastructure around real-time stream processing analytics. As a data-driven company, we have a several-thousand-node Hadoop cluster with hundreds of Hive tables critical to Pandora’s operational and reporting success...

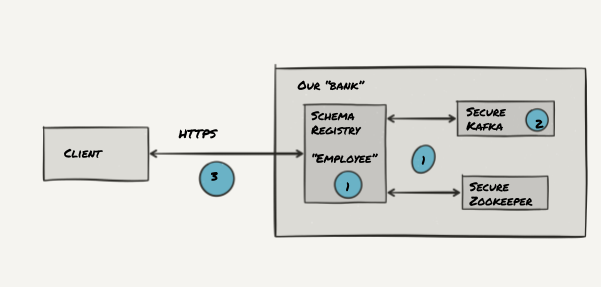

Securing the Confluent Schema Registry for Apache Kafka®

Note: The blog post Ensure Data Quality and Data Evolvability with a Secured Schema Registry contains more recent information. If you use Apache Kafka to integrate and decouple different data […]

Log Compaction – Highlights in the Apache Kafka® and Stream Processing Community – March 2017

Big news this month! First and foremost, Confluent Platform 3.2.0 with Apache Kafka® 0.10.2.0 was released! Read about the new features, check out all 200 bug fixes and performance improvements […]

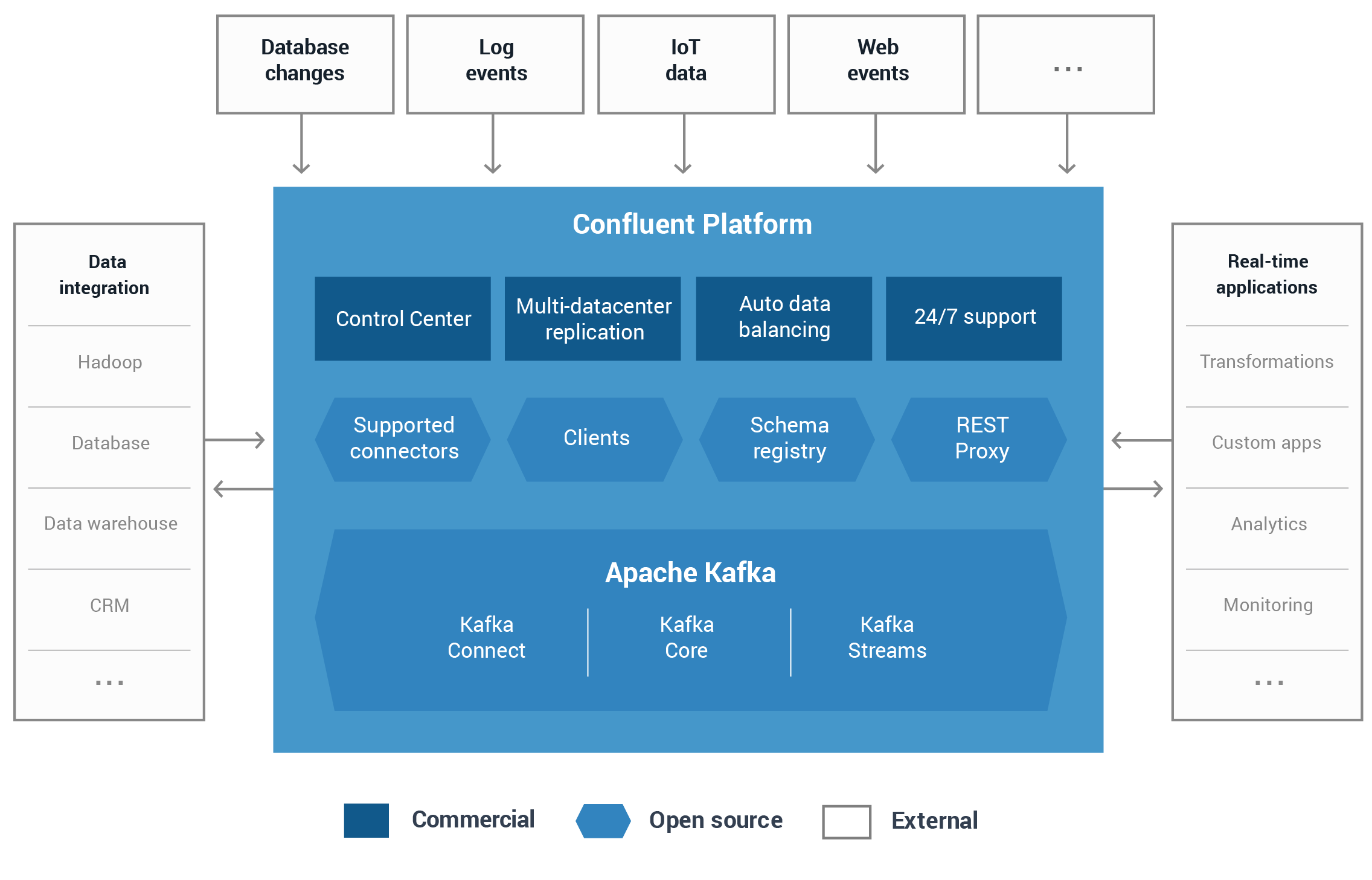

Confluent 3.2 with Apache Kafka® 0.10.2 Now Available

We’re excited to announce the release of Confluent 3.2, our enterprise streaming platform built on Apache Kafka. At Confluent, our vision is to provide a comprehensive, enterprise-ready streaming platform that […]

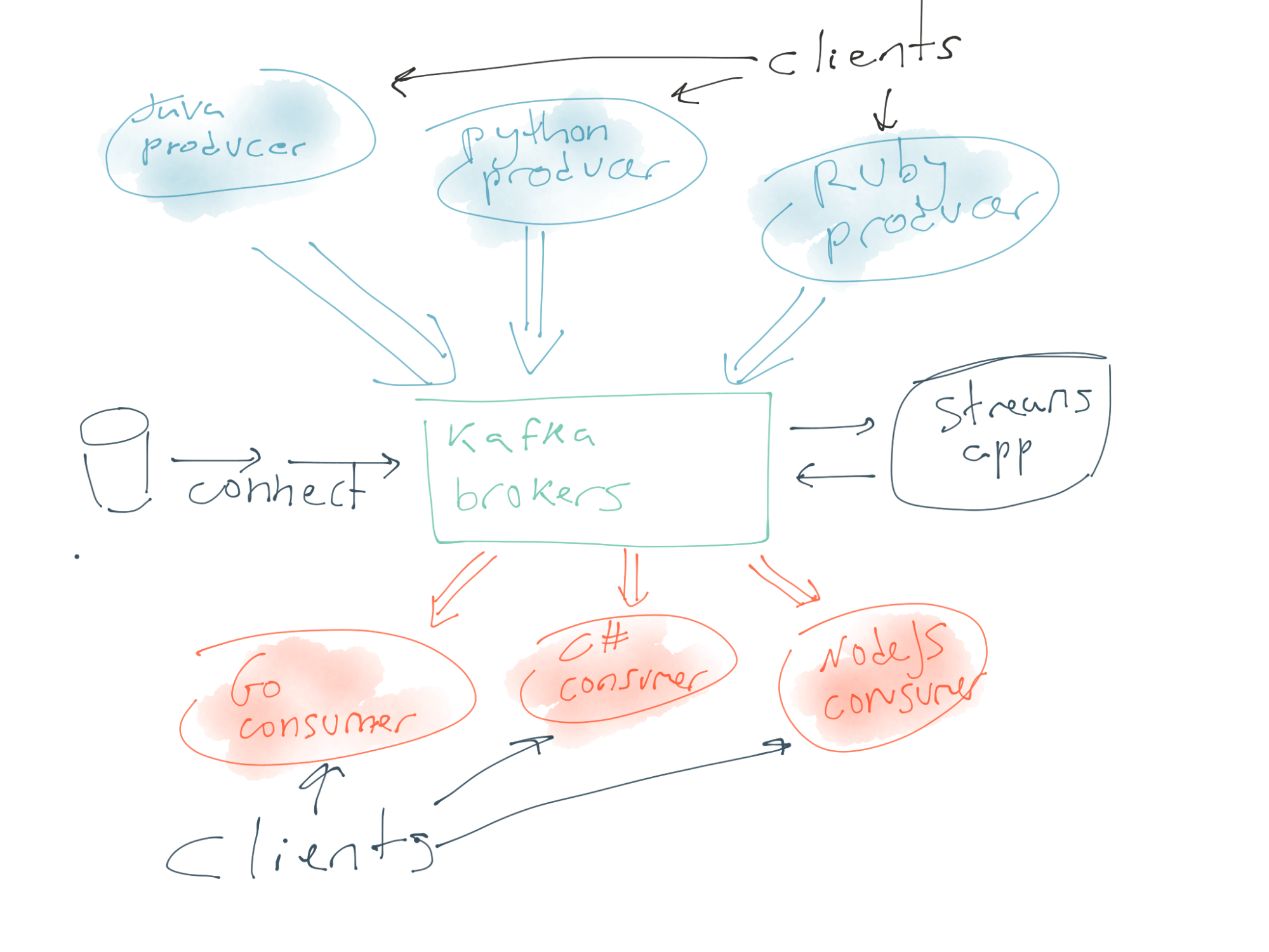

The First Annual State of Apache Kafka® Client Use Survey

At the end of 2016 we conducted a survey of the Apache Kafka® community regarding their use of Kafka clients (the producers and consumers used with Kafka) and their priorities […]

Log Compaction – Highlights in the Apache Kafka and Stream Processing Community – February 2017

As always, we bring you news, updates and recommended content from the hectic world of Apache Kafka® and stream processing. Sometimes it seems that in Apache Kafka every improvement is […]

Confluent Delivers Upgrades to Clients, The streams API in Kafka, Brokers and Apache Kafka™ 0.10.1.1

Last November, we released Confluent 3.1, with new connectors, clients, and Enterprise features. Today, we’re pleased to announce Confluent 3.1.2, a patch release which incorporates the latest stable version of […]

Apache Kafka: Getting Started

Seems that many engineers have “Learn Kafka” on their new year resolution list. This isn’t very surprising. Apache Kafka is a popular technology with many use-cases. Armed with basic Kafka […]

Log Compaction: Highlights in the Apache Kafka and Stream Processing Community – January 2017

Happy 2017! Wishing you a wonderful year full of fast and scalable data streams. Many things have happened since we last shared the state of Apache Kafka® and the streams […]

The Data Dichotomy: Rethinking the Way We Treat Data and Services

If you were to stumble upon the whole microservices thing, without any prior context, you’d be forgiven for thinking it a little strange. Taking an application and splitting it into fragments, […]