Author: Robin Moffatt

Introducing the Current 2022 Program Committee

The Call for Papers for Current 2022: The Next Generation of Kafka Summit is in full swing, with speakers from around the data streaming world putting their best talks forward […]

Current 2022: How to Become a Speaker

At this year’s Kafka Summit London we announced Current 2022: The Next Generation of Kafka Summit. This is a technical conference for everything data in motion. Current 2022 will take […]

Kafka Summit London 2022: The Full Recap

It’s official: Kafka Summit is back! Technically, it never went away—it just went online. But this week in London, Kafka Summit returned in all its glory to welcome over 1,200 […]

Kafka Summit London 2022: Welcoming the Apache Kafka Community Back to In-Person Events!

In just a few weeks’ time, the Apache Kafka® community will be convening for Kafka Summit London 2022—its first in-person event in over two years. The conference is being held […]

Detecting Patterns of Behaviour in Streaming Maritime AIS Data with Confluent

This is part two in a blog series on streaming a feed of AIS maritime data into Apache Kafka® using Confluent and how to apply it for a variety of […]

Streaming ETL and Analytics on Confluent with Maritime AIS Data

One of the canonical examples of streaming data is tracking location data over time. Whether it’s ride-sharing vehicles, the position of trains on the rail network, or tracking airplanes waking […]

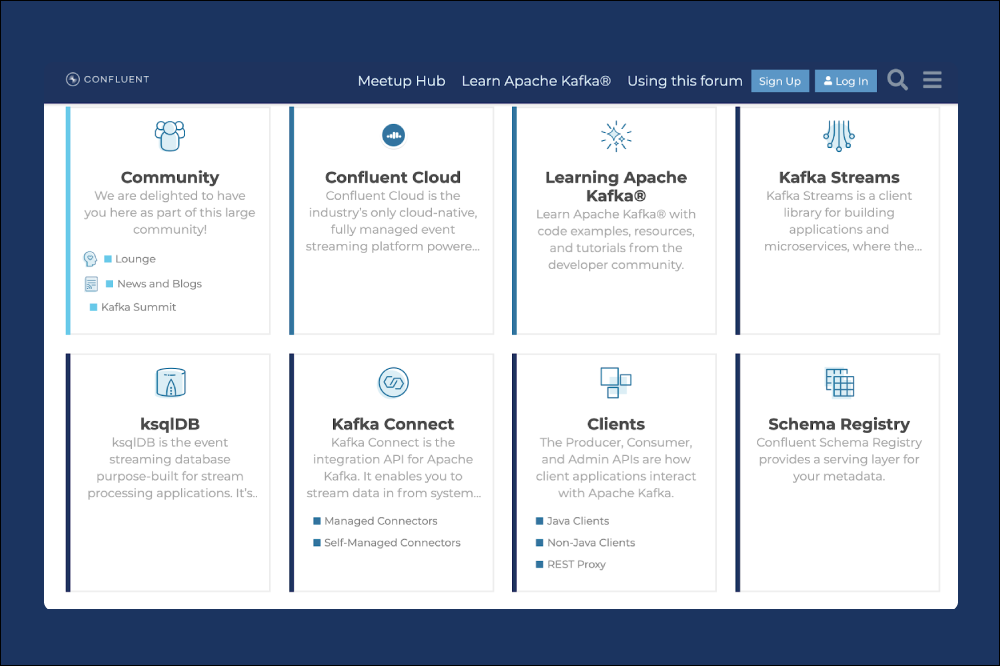

Announcing the Confluent Community Forum

Today, we’re delighted to launch the Confluent Community Forum. Built on Discourse, a platform many developers will already be familiar with, this new forum is a place for the community […]

Analysing Historical and Live Data with ksqlDB and Elastic Cloud

Building data pipelines isn’t always straightforward. The gap between the shiny “hello world” examples of demos and the gritty reality of messy data and imperfect formats is sometimes all too […]

My Python/Java/Spring/Go/Whatever Client Won’t Connect to My Apache Kafka Cluster in Docker/AWS/My Brother’s Laptop. Please Help!

When a client wants to send or receive a message from Apache Kafka®, there are two types of connection that must succeed: The initial connection to a broker (the […]

Learning All About Wi-Fi Data with Apache Kafka and Friends

Recently, I’ve been looking at what’s possible with streams of Wi-Fi packet capture (pcap) data. I was prompted after initially setting up my Raspberry Pi to capture pcap data and […]

Building a Telegram Bot Powered by Apache Kafka and ksqlDB

Imagine you’ve got a stream of data; it’s not “big data,” but it’s certainly a lot. Within the data, you’ve got some bits you’re interested in, and of those bits, […]

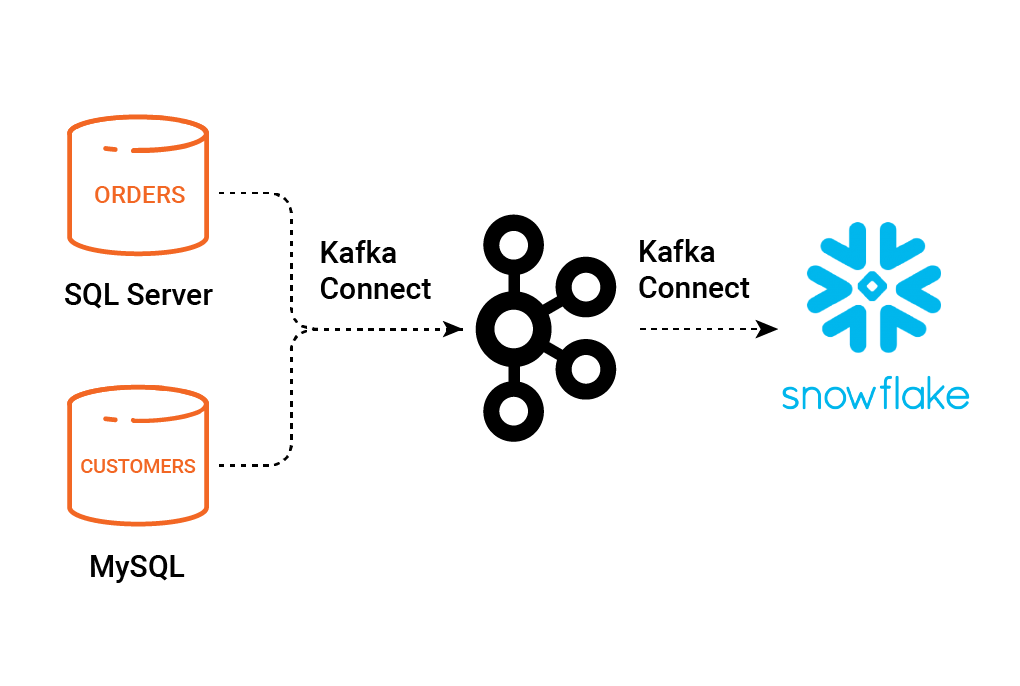

Pipeline to the Cloud – Streaming On-Premises Data for Cloud Analytics

This article shows how you can offload data from on-premises transactional (OLTP) databases to cloud-based datastores, including Snowflake and Amazon S3 with Athena. I’m also going to take the opportunity […]

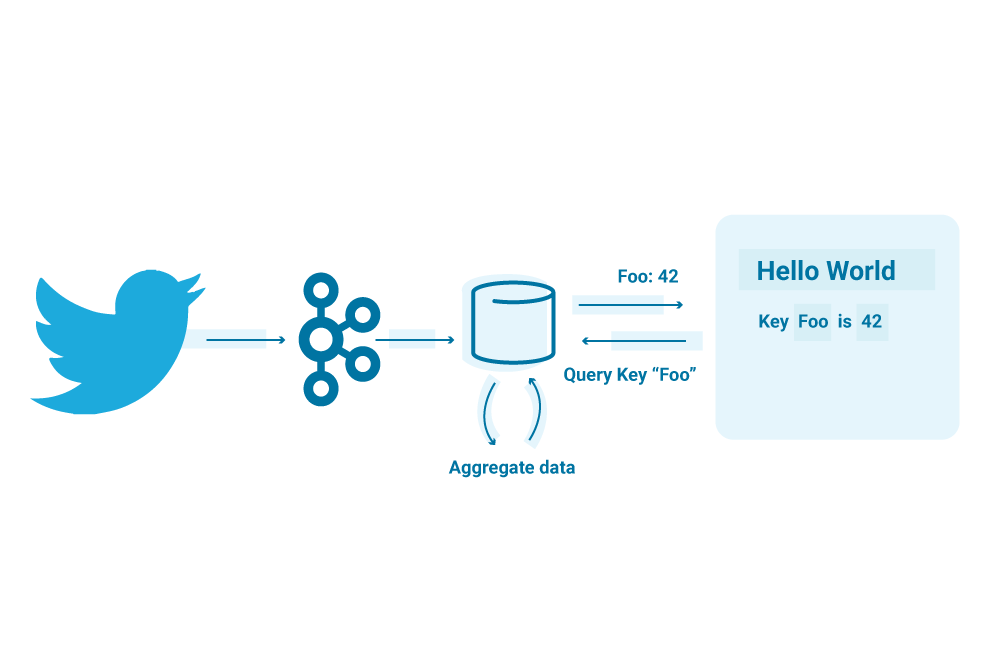

Exploring ksqlDB with Twitter Data

When KSQL was released, my first blog post about it showed how to use KSQL with Twitter data. Two years later, its successor ksqlDB was born, which we announced this […]

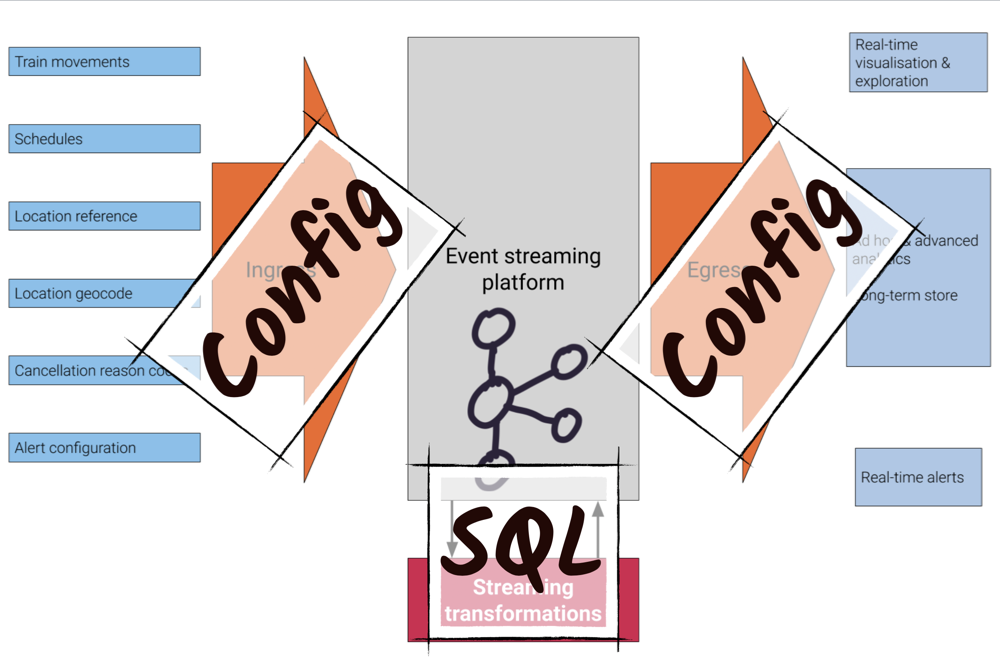

🚂 On Track with Apache Kafka – Building a Streaming ETL Solution with Rail Data

Trains are an excellent source of streaming data—their movements around the network are an unbounded series of events. Using this data, Apache Kafka® and Confluent Platform can provide the foundations […]