
Apache Kafka

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method for connecting database tables to data streams, but it comes with drawbacks. First-class data products, the next evolution of the CDC pattern, provide resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Contributing to Apache Kafka®: How to Write a KIP

Learn how to contribute to open source Apache Kafka by writing Kafka Improvement Proposals (KIPs) that solve problems and add features! Read on for real examples.

Powering AI Agents with Real-Time Data Using Anthropic’s MCP and Confluent

Model Context Protocol (MCP), introduced by Anthropic, is a new standard that simplifies AI integrations by providing a secure and consistent way to connect AI agents with external tools and data sources…

How To Delete a Topic in Apache Kafka®: A Step-By-Step Guide

Learn how to delete topics in Apache Kafka safely and efficiently. Explore step-by-step instructions, best practices, and important considerations for managing Kafka topics.

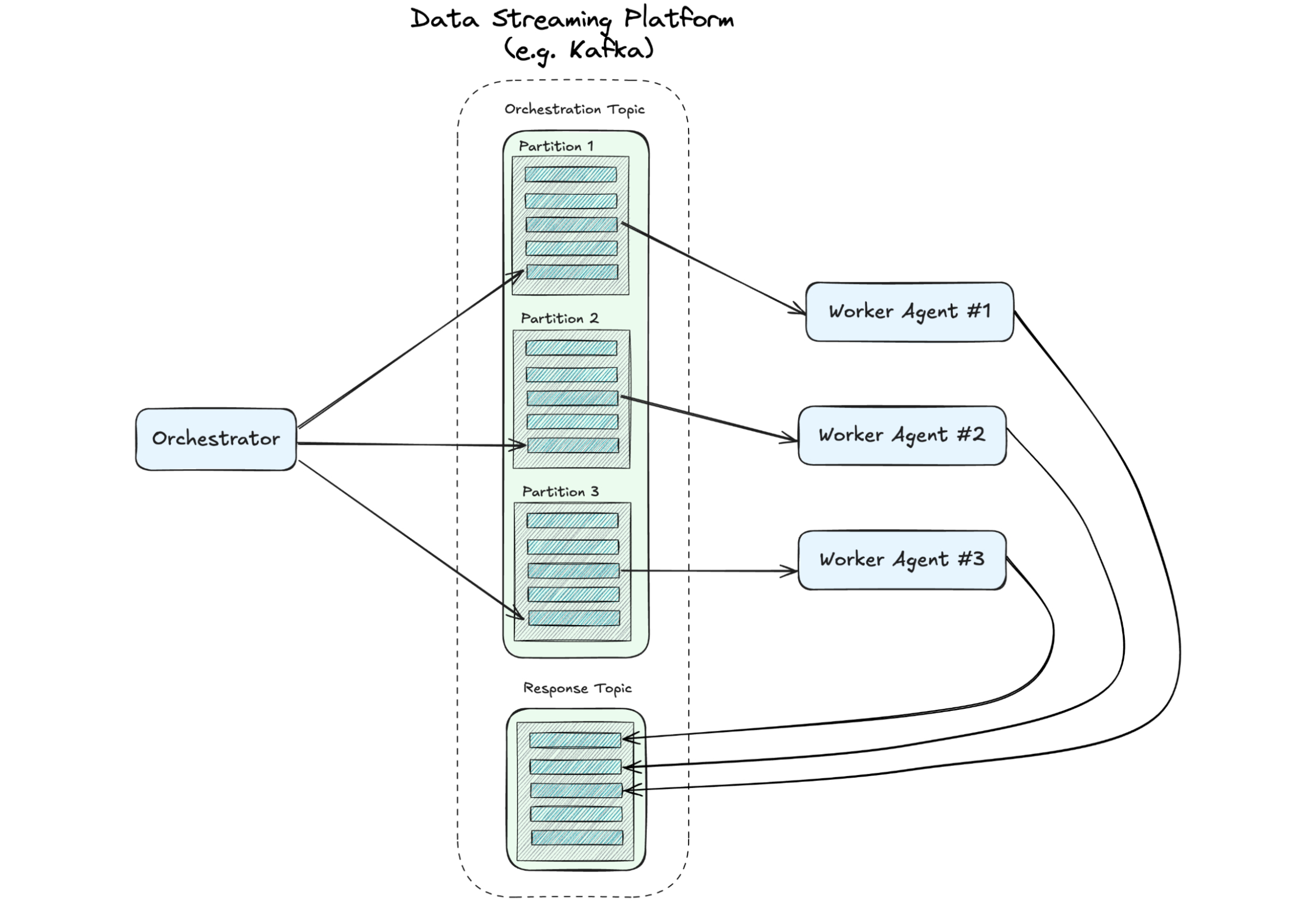

A Distributed State of Mind: Event-Driven Multi-Agent Systems

This article explores how event-driven design, a proven approach in microservices, can address this chaos and produce scalable, efficient multi-agent systems. If you’re leading teams toward the future of AI, understanding these patterns is critical, and we’ll demonstrate how to implement them.

Building High Throughput Apache Kafka Applications with Confluent and Provisioned Mode for AWS Lambda Event Source Mapping (ESM)

Learn how to use the recently launched Provisioned Mode for Lambda’s Kafka ESM to build high-throughput Kafka applications on Confluent Cloud. This post also walks through a sample scenario for activating and testing Provisioned Mode for ESM and outlines best practices.

Queues in Apache Kafka®: Enhancing Message Processing and Scalability

Adding queue support to Kafka opens up a world of new possibilities for users, making Kafka even more versatile. By combining the strengths of traditional queue systems with Kafka’s robust log-based architecture, customers now have a solution that can handle both streaming and queue processing.

Introducing Confluent’s JavaScript Client for Apache Kafka®

Confluent announces the general availability of a new JavaScript client for Apache Kafka®, a fully supported Kafka client for JavaScript and TypeScript programmers working in Node.js environments.

Deep Dive into Handling Consumer Fetch Requests: Kafka Producer and Consumer Internals, Part 4

Dive into the inner workings of brokers as they serve data up to a consumer.

Introducing Apache Kafka® 3.9

We are proud to announce the release of Apache Kafka 3.9.0. This is a major release and the final one in the 3.x line. It will also be the final major release to support the deprecated Apache ZooKeeper® mode. Starting with 4.0, Kafka will always run without ZooKeeper.

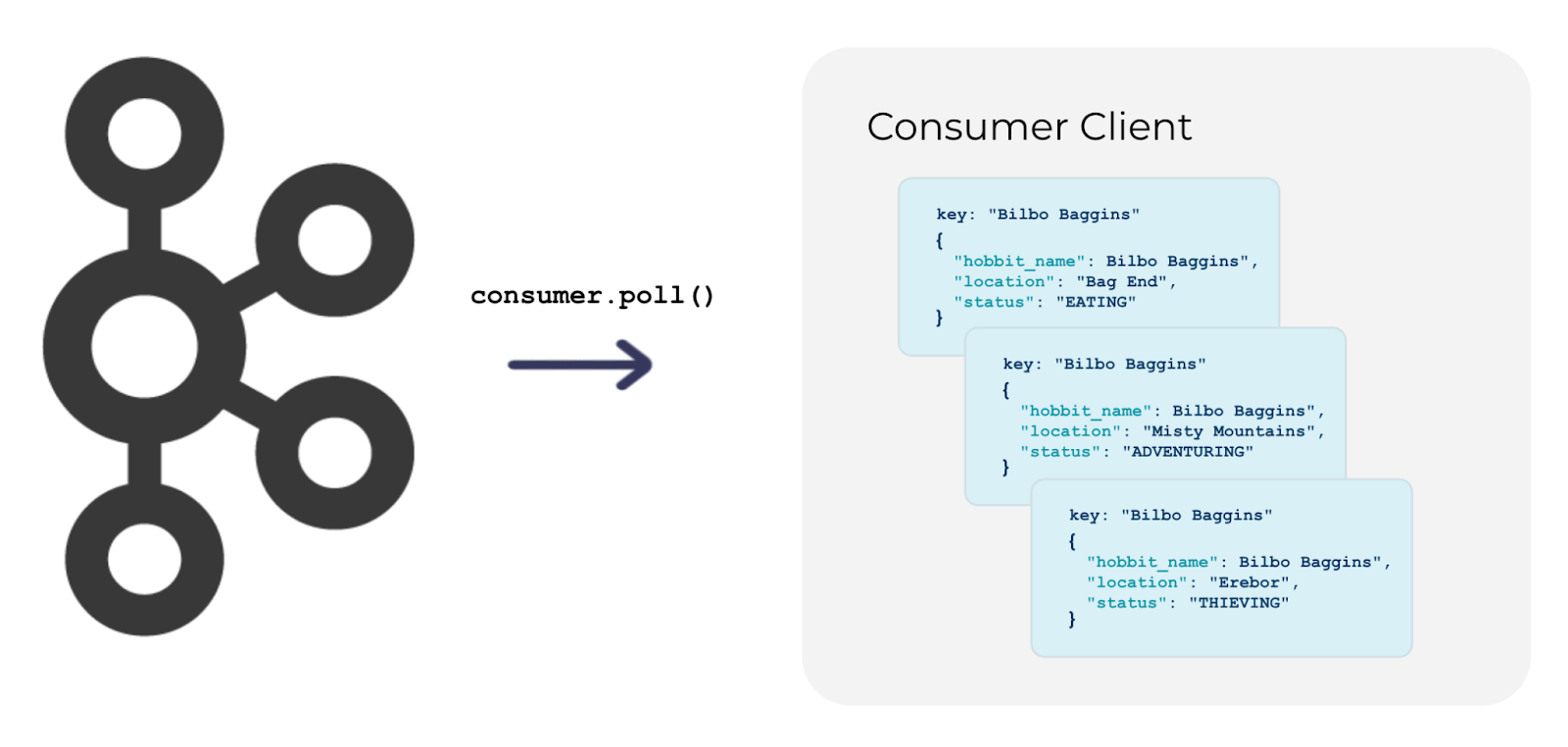

Preparing the Consumer Fetch: Kafka Producer and Consumer Internals, Part 3

In this third installment of a blog series examining Kafka Producer and Consumer Internals, we switch our attention to Kafka consumer clients, examining how consumers interact with brokers, coordinate their partitions, and send requests to read data from Kafka topics.

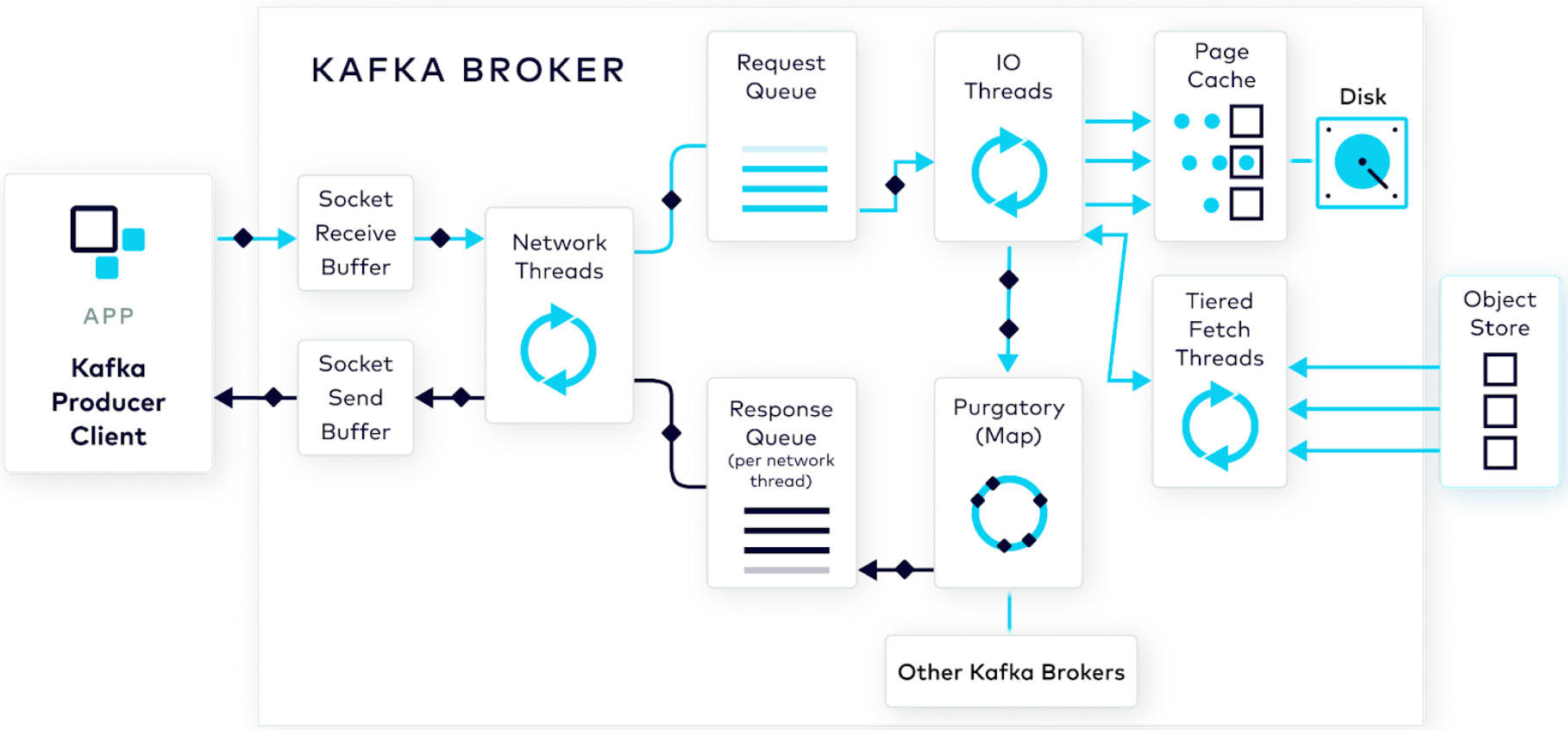

Handling the Producer Request: Kafka Producer and Consumer Internals, Part 2

In this post, the second in the Kafka Producer and Consumer Internals series, we follow our brave hero, a well-formed produce request, on its way to being processed by the broker and having its data stored on the cluster.

How Producers Work: Kafka Producer and Consumer Internals, Part 1

The beauty of Kafka as a technology is that it can do a lot with little effort on your part. In effect, it’s a black box. But what if you need to see into the black box to debug something? This post shows what the producer does behind the scenes to help prepare your raw event data for the broker.

Introducing Apache Kafka® 3.8

We are proud to announce the release of Apache Kafka 3.8.0. This release contains many new features and improvements. This blog post highlights some of the more prominent features. For a full list of changes, be sure to check the release notes.

Building a Full-Stack Application With Kafka and Node.js

It’s a well-known debate: tabs or spaces? Let’s settle it, Kafka-style. We’ll use the new confluent-kafka-javascript client to build an app that produces the current vote counts to a Kafka topic and consumes from that same topic to surface them in a JavaScript frontend.