Confluent

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Contributing to Apache Kafka®: How to Write a KIP

Learn how to contribute to open source Apache Kafka by writing Kafka Improvement Proposals (KIPs) that solve problems and add features! Read on for real examples.

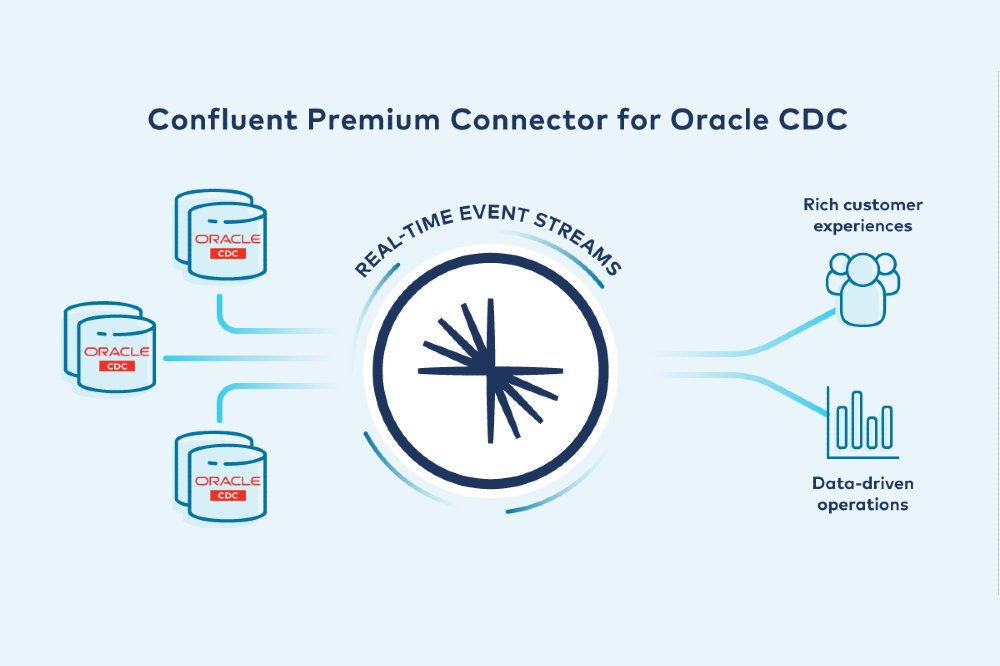

Oracle CDC Source Premium Connector is Now Generally Available

One of the most common relational database systems that connects to Apache Kafka® is Oracle, which often holds highly critical enterprise transaction workloads. While Oracle Database (DB) excels at many […]

Automatic Observer Promotion Brings Fast and Safe Multi-Datacenter Failover with Confluent Platform 6.1

Persisting data in multiple regions has become crucial for modern businesses: They need their mission-critical data to be protected from accidents and disasters. They can achieve this goal by running […]

Introducing Confluent Platform 6.1

We are pleased to announce the release of Confluent Platform 6.1. With this release, we are further simplifying management tasks for Apache Kafka® operators and providing even higher availability for […]

How to Consume Data from Apache Kafka Topics and Schema Registry with Confluent and Azure Databricks

How do you process IoT data, change data capture (CDC) data, or streaming data from sensors, applications, and sources in real time? Apache Kafka® and Apache Spark® are widely adopted […]

Event Streaming Across Networks and Corporate Firewalls Using PubNub and Confluent Platform

This year’s pandemic has forced businesses all around the world to adopt a “remote-first” approach to their operations, with an emphasis on better enabling collaboration, remote work, and productivity. This […]

Property Based Testing Confluent Cloud Storage for Fun and Safety

Confluent uses property-based testing to test various aspects of Confluent Cloud’s Tiered Storage feature. Tiered Storage shifts data from expensive local broker disks to cheaper, scalable object storage, thereby reducing […]

Putting Apache Kafka to REST: Confluent REST Proxy 6.0

Confluent Platform 6.0 was released last year, bringing with it many exciting new features to Confluent REST Proxy. Before we dive into what was added, let’s first revisit what REST […]

Introducing the Confluent Parallel Consumer

Consuming messages in parallel is what Apache Kafka® is all about, so you may well wonder, why would we want anything else? It turns out that, in practice, there are […]

Getting Started with Spring Cloud Data Flow and Confluent Cloud

Data is the currency of competitive advantage in today’s digital age. All organizations struggle with their data due to the sheer variety of data types and ways that it can […]

Ensure Data Quality and Data Evolvability with a Secured Schema Registry

Organizations define standards and policies around the usage of data to ensure the following: Data quality: Data streams follow the defined data standards as represented in schemas. Data evolvability: Schemas […]

Project Metamorphosis Month 8: Complete Apache Kafka in Confluent Cloud

This is the eighth and final month of Project Metamorphosis: an initiative that brings the best characteristics of modern cloud-native data systems to the Apache Kafka® ecosystem, served from Confluent […]

Real-Time Serverless Ingestion, Streaming, and Analytics using AWS and Confluent Cloud

Due to the distributed architecture of Apache Kafka®, the operational burden of managing it can quickly become a limiting factor on adoption and developer agility. For this reason, it is […]

The Cloud-Native Evolution of Apache Kafka on Kubernetes

It’s almost KubeCon! Let’s talk about the state of cloud-native Apache Kafka® and other distributed systems on Kubernetes. Over the last decade, our industry has seen the rise of container […]

Self-Describing Events and How They Reduce Code in Your Processors

Have you ever had to write a program that needed to handle any data payload that could be thrown at you? If so, did you always have to update the […]