
Introducing Confluent Platform 6.0

Each month, we’ve announced a set of Confluent features organized around what we think are the key foundational traits of cloud-native data systems as part of Project Metamorphosis. Data systems built for the cloud are different in significant ways, and the experience of using these systems is simply much, much better. We want to bring that cloud-native Apache Kafka® experience through both Confluent Cloud and Confluent Platform.

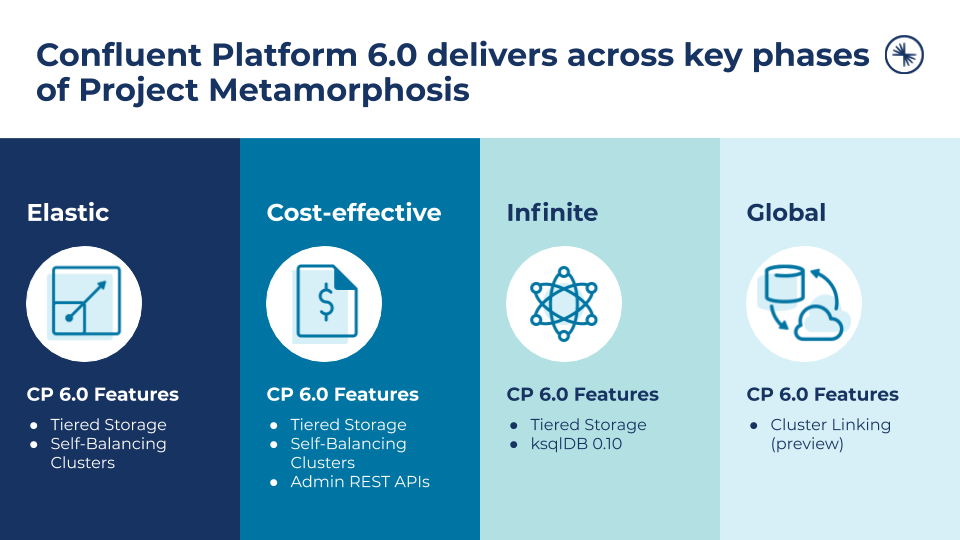

Today, we are pleased to announce the release of Confluent Platform 6.0. With this release, Confluent delivers across key phases of Project Metamorphosis, enhancing Kafka with greater elasticity, improved cost effectiveness, infinite data retention, and global availability.

- Elastic: Scale up Kafka to meet any event streaming workload and scale it back down to avoid over-provisioning infrastructure using Self-Balancing Clusters and Tiered Storage

- Cost Effective: Reduce the total cost of ownership (TCO) of self-managing Kafka by up to 40% with a smaller infrastructure footprint, reduced operational burden, and minimized downtime by leveraging Self-Balancing Clusters, Tiered Storage, Admin REST APIs, and more

- Infinite: Transform Kafka into your central nervous system for all events by enabling infinite data retention with Tiered Storage and simplified stream processing with ksqlDB

- Global: Easily deploy hybrid cloud and multi-cloud Kafka clusters using Cluster Linking to allow your data to exist wherever your business needs it


This blog post walks through the key benefits of several new features and how these features complement one another to deliver on the cloud-native themes from Project Metamorphosis. As with any Confluent Platform release, the full set of details is in the release notes, but this post provides a high-level overview of what’s included.

What’s new in Confluent Platform 6.0

Confluent Platform 6.0 is one of our most feature-rich releases to date. These features simplify management operations and reduce the cost of adopting Kafka, so you can focus instead on building event streaming applications that change your business. There are five features in particular that I’d like to highlight: Tiered Storage, Self-Balancing Clusters, generally available pull queries and embedded connectors in ksqlDB, Admin REST APIs, and Cluster Linking (in preview).


Tiered Storage enables infinite data retention and elastic scalability

As organizations mature in their event streaming journey with more mission-critical systems and event streaming applications connecting to Kafka, the platform requires storing larger amounts of data for longer periods of time. Furthermore, the platform’s workload can change dynamically, so it is also important that compute and storage resources can be scaled quickly and independently of one another.

With Confluent Platform 6.0, we are excited to announce the general availability of Tiered Storage. Tiered Storage allows Kafka to recognize two tiers of storage: local disks and cost-effective object stores, such as Amazon S3 and Google Cloud Storage. As data ages on the local disk, brokers are now able to offload it to object storage while retaining performant access to the data. With Tiered Storage, you no longer face a trade-off between retaining more data over longer periods of time and controlling your infrastructure costs.

Tiered Storage delivers three primary benefits that revolutionize the way you experience Kafka:

- Infinite retention: If you want to retain very large amounts of data for long periods of time, Tiered Storage allows you to do so in a low-cost fashion, enabling virtually infinite retention. Infinite retention allows you to completely reimagine what event streaming applications can do, as you can now build applications that leverage both real-time and historical events through Kafka.

- Platform elasticity and faster data balancing: With Tiered Storage, you can scale compute without having to scale storage, and vice versa. When scaling compute, rebalancing data becomes orders of magnitude faster, because new brokers simply point to older data in the storage layer instead of copying it from one broker to another. Likewise, when retention requirements increase, you can add storage capacity in the object store without adding brokers. This delivers a fundamentally higher level of elasticity for the event streaming platform.

- Reduced infrastructure costs: Tiered Storage can help dramatically reduce the infrastructure footprint of Kafka, regardless of whether you need to retain data for longer periods of time. Rather than paying for expensive local disks, you can offload the bulk of Kafka storage to a more cost-effective storage tier, leading to significant savings.
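The rebalancing benefit above can be illustrated with a back-of-the-envelope Python sketch. It assumes a deliberately simplified model of our own, not a description of Confluent’s implementation: when a replica moves to a new broker, only the portion still on local disk is copied, while older segments stay in object storage. The `PartitionReplica` class, the `bytes_to_move` helper, and all sizes are hypothetical illustration values.

```python
from dataclasses import dataclass

GB = 1024 ** 3


@dataclass
class PartitionReplica:
    """Hypothetical storage model for one partition replica."""
    total_bytes: int  # all data retained for the partition
    local_bytes: int  # recent data kept on the broker's local disk when tiering is on


def bytes_to_move(replicas: list[PartitionReplica], tiered: bool) -> int:
    """Bytes copied between brokers when these replicas are reassigned.

    Without Tiered Storage, all retained data sits on local disk and must be copied.
    With Tiered Storage (in this simplified model), only the local portion is copied;
    tiered segments are already in the shared object store.
    """
    if tiered:
        return sum(r.local_bytes for r in replicas)
    return sum(r.total_bytes for r in replicas)


# Illustrative numbers only: move 10 replicas that each retain 500 GB,
# of which the most recent 10 GB stays on local disk under tiering.
moving = [PartitionReplica(total_bytes=500 * GB, local_bytes=10 * GB) for _ in range(10)]

print(bytes_to_move(moving, tiered=False) // GB)  # 5000
print(bytes_to_move(moving, tiered=True) // GB)   # 100
```

In this model the ratio depends only on how much of each partition remains local, so the longer the retention period, the larger the difference.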

For those who want to make Kafka the centralized system of record for all events in their organization, Tiered Storage enables you to do so with a significantly lower operational and cost burden.

Self-Balancing Clusters improve Kafka’s performance, elasticity, and ease of operations

If you have managed a Kafka cluster before, you are likely familiar with the challenges that come with manually reassigning partitions to different brokers to ensure the workload is balanced across the cluster. For organizations with large Kafka deployments, reshuffling large amounts of data can be daunting, tedious, and risky, especially if mission-critical applications are built on top of the cluster. But even for the smallest Kafka use cases, the process is time-consuming and prone to human error.

That’s why we’re introducing Self-Balancing Clusters as part of Confluent Platform 6.0. With Self-Balancing Clusters, partition rebalances are fully automated to optimize Kafka’s throughput, accelerate broker scaling, and reduce the operational burden of running a large cluster.

At steady state, Self-Balancing Clusters monitor the skew of resource utilization across the brokers and continuously reassign partitions to optimize cluster performance and balance on an ongoing basis. When scaling the platform up or down, Self-Balancing Clusters automatically recognize the presence of new brokers or the removal of old brokers and trigger a subsequent partition reassignment. This enables you to easily add and remove brokers, making your Kafka clusters even more elastic.
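This post does not describe the algorithm behind Self-Balancing Clusters, so the following Python sketch is only a toy illustration of the general idea: measure how far the busiest broker is above the mean load and greedily move partitions from the most loaded broker to the least loaded one until the skew is within a tolerance. The `rebalance` function, the single load number per partition, and the 10% threshold are all hypothetical; a production balancer would also have to account for replica placement and multiple resource types.

```python
def rebalance(assignment: dict[int, dict[str, int]], max_skew: float = 0.1) -> list[tuple[str, int, int]]:
    """Greedily move partitions until no broker exceeds the mean load by more than max_skew.

    assignment maps broker id -> {partition name: load} and is modified in place.
    Returns the moves performed as (partition, from_broker, to_broker).
    """
    moves = []
    total = sum(sum(parts.values()) for parts in assignment.values())
    mean = total / len(assignment)
    while True:
        loads = {broker: sum(parts.values()) for broker, parts in assignment.items()}
        hot = max(loads, key=loads.get)
        cold = min(loads, key=loads.get)
        if loads[hot] - mean <= max_skew * mean:
            break  # skew is within tolerance
        gap = loads[hot] - loads[cold]
        # Only a move smaller than the hot/cold gap strictly reduces overall skew.
        candidates = [(load, name) for name, load in assignment[hot].items() if 0 < load < gap]
        if not candidates:
            break  # no single move helps; a real balancer would try other strategies
        load, name = max(candidates)
        del assignment[hot][name]
        assignment[cold][name] = load
        moves.append((name, hot, cold))
    return moves


# Three brokers with four equal partitions each, plus a newly added empty broker.
cluster = {b: {f"t-{4 * (b - 1) + i}": 10 for i in range(4)} for b in (1, 2, 3)}
cluster[4] = {}

moves = rebalance(cluster)
print(moves)
print({b: sum(parts.values()) for b, parts in cluster.items()})  # {1: 30, 2: 30, 3: 30, 4: 30}
```

Adding broker 4 creates skew, and the loop moves one partition from each existing broker onto it without any manual reassignment plan, which mirrors the scale-out behavior described above.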

These benefits come without the manual intervention, complex math, and risk of human error that partition reassignments typically entail. As a result, data rebalances are completed in far less time, and you are free to focus on higher-value event streaming projects instead of constantly supervising your clusters.

To learn more about Self-Balancing Clusters, read our dedicated blog post on the feature.

ksqlDB makes pull queries and embedded connectors generally available

More than ever, businesses are turning to Kafka to build event streaming applications that offer exceptional, real-time experiences to their customers. Unfortunately, event streaming applications typically require a heavyweight architecture that integrates Kafka with several additional distributed systems for event capture, stream processing, and serving point-in-time queries. The parts do not fit together as well as you’d hope; these systems are complex, and each integration is a non-trivial project. As a result, building architectures to support event streaming applications remains complicated, despite their benefit to customers.

Last year, we introduced two major features to ksqlDB in preview: pull queries and embedded connectors. These features transformed the product from a SQL-based stream processor to an event streaming database, a new type of database that simplifies these stream processing architectures and makes it easy to build event streaming applications. Today, we are excited to announce that both features are generally available in Confluent Platform 6.0. With support for pull queries and embedded connectors, the complex architecture once required to build event streaming applications is reduced to two components: Kafka and ksqlDB.

Pull queries offer point-in-time lookups on materialized tables from a stream of data, similar to SELECT statements in a traditional database. They complement push queries, which allow applications to subscribe to query results as soon as they change. Taking a ride-sharing application as an example, pull queries could look up a driver’s rating at a point in time, while push queries could stream the driver’s location as it changes.
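To make the difference between the two query types concrete, here is a small Python model of the ride-sharing example. It is not ksqlDB’s API (in ksqlDB, both kinds of query are written in SQL); the `MaterializedTable` class, its methods, and the driver IDs and values are hypothetical, and the model only mimics the semantics: a pull returns the current value once, while a push delivers every subsequent change.

```python
from collections import defaultdict
from typing import Any, Callable, Optional


class MaterializedTable:
    """Toy model of a table materialized from a stream of keyed events."""

    def __init__(self) -> None:
        self._state: dict = {}
        self._subscribers = defaultdict(list)

    def apply(self, key: str, value: Any) -> None:
        """Consume one event: update the latest value, then notify push subscribers."""
        self._state[key] = value
        for callback in self._subscribers.get(key, []):
            callback(key, value)

    def pull(self, key: str) -> Optional[Any]:
        """Pull query: a one-off lookup of the current value, like a SELECT."""
        return self._state.get(key)

    def push(self, key: str, callback: Callable[[str, Any], None]) -> None:
        """Push query: receive every future change for `key` as it happens."""
        self._subscribers[key].append(callback)


ratings = MaterializedTable()
locations = MaterializedTable()

# Subscribe to location changes before they happen (push).
updates = []
locations.push("driver-42", lambda key, value: updates.append(value))

ratings.apply("driver-42", 4.7)
ratings.apply("driver-42", 4.8)
locations.apply("driver-42", (37.77, -122.42))
locations.apply("driver-42", (37.78, -122.41))

print(ratings.pull("driver-42"))  # 4.8
print(updates)                    # [(37.77, -122.42), (37.78, -122.41)]
```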

ksqlDB also supports running connectors in an embedded setting, meaning connectors can run directly on ksqlDB servers. You can easily work with external data sources using Confluent’s 100+ pre-built connectors directly through ksqlDB.

With ksqlDB handling connector management, stream processing, and point-in-time lookups, you can build event streaming applications with fewer moving parts in the underlying architecture. A complete, end-to-end event streaming application that changes your business can now be built with just a few simple SQL statements.

Confluent Platform 6.0 introduces REST APIs for administrative operations

To manage your clusters effectively, you need flexible ways to perform administrative operations. Confluent Platform already offers several, including Confluent Control Center, the Confluent CLI, and the Kafka clients. However, you might prefer a RESTful interface because REST APIs are simple and language-agnostic.

In Confluent Platform 6.0, we are expanding your options for how you choose to manage Kafka by adding REST APIs for a comprehensive set of administrative operations, including:

- Describe, list, and configure brokers

- Create, delete, describe, list, and configure topics

- Delete, describe, and list consumer groups

- Create, delete, describe, and list ACLs

- List partition reassignments

You can now use REST APIs to perform the same administrative operations as Control Center, the Confluent CLI, or the Kafka clients. And with existing REST APIs for producing and consuming messages, Confluent Platform provides a complete RESTful interface to your cluster that offers the same functionality as the Kafka clients.
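As an illustration of what a RESTful administrative call looks like, the following Python sketch uses only the standard library to build, without sending, requests for two of the topic operations listed above. The endpoint paths and JSON field names (`topic_name`, `partitions_count`, `replication_factor`) follow our reading of Confluent’s v3 REST API and should be checked against the API reference for your version; the base URL, cluster ID, and topic name are placeholders, and depending on the deployment the API may be served under a different host, port, or path prefix.

```python
import json
import urllib.request


def create_topic_request(base_url: str, cluster_id: str, topic: str,
                         partitions: int, replication_factor: int) -> urllib.request.Request:
    """Build (but do not send) a request that creates a topic via the v3 Admin REST API."""
    body = {
        "topic_name": topic,
        "partitions_count": partitions,
        "replication_factor": replication_factor,
    }
    return urllib.request.Request(
        url=f"{base_url}/v3/clusters/{cluster_id}/topics",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def list_topics_request(base_url: str, cluster_id: str) -> urllib.request.Request:
    """Build (but do not send) a request that lists the topics in a cluster."""
    return urllib.request.Request(f"{base_url}/v3/clusters/{cluster_id}/topics", method="GET")


# Placeholder endpoint and cluster ID; substitute your own.
req = create_topic_request("http://localhost:8082", "my-cluster-id", "orders", 6, 3)
list_req = list_topics_request("http://localhost:8082", "my-cluster-id")
print(req.get_method(), req.full_url)

# Against a running server, a request would be sent like this:
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read()))
```

Because the interface is plain HTTP and JSON, the same calls can be made from any language or from a shell script, which is the language-agnostic benefit described above.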

Cluster Linking simplifies hybrid cloud and multi-cloud deployments for Kafka

Hybrid cloud and multi-cloud strategies enable businesses to leverage best-of-breed solutions, reduce infrastructure costs, and increase developer agility. However, connecting Kafka clusters between different environments and across long distances remains operationally complex, in part because existing replication tools do not preserve topic offsets, so the same message can have different offsets in each cluster. As a result, many organizations are limited to isolated clusters for each environment, making it difficult to create a consistent data plane across the organization.

Confluent Platform 6.0 introduces Cluster Linking in preview to connect independent Kafka clusters in a simple, efficient manner. Rather than siloing individual clusters, Cluster Linking ensures Kafka data exists wherever it is needed by replicating data without needing to deploy any additional nodes to the architecture. If you embrace hybrid cloud and multi-cloud architectures, or if you are pursuing use cases that span the globe, Kafka can bridge clusters between different environments and across any distance to maintain its place as your organization’s central nervous system.

Cluster Linking also offers a cost-effective, secure, and performant transport layer between public cloud providers. Because Kafka retains messages that can be read by unlimited consumers, data can be replicated once from one cloud provider to another using Cluster Linking and then be read by several applications in that new datacenter. This saves substantial networking costs and greatly improves performance compared with having several applications each read the same data across cloud providers.
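The networking saving described above is simple arithmetic: without a local copy, every application in the second cloud pulls the same data across the provider boundary, whereas with a replicated copy the data crosses once. The sketch below uses a hypothetical `cross_cloud_bytes` helper and made-up volumes to compute the cross-cloud traffic for one topic; multiplying the result by your provider’s egress price gives a rough cost estimate.

```python
GB = 10 ** 9


def cross_cloud_bytes(daily_bytes: int, consuming_apps: int, replicate_once: bool) -> int:
    """Bytes per day that cross the cloud-provider boundary for one topic.

    replicate_once=True: the data is copied once, and every application in the
    destination cloud reads the local copy.
    replicate_once=False: each application reads the data remotely on its own.
    """
    if replicate_once:
        return daily_bytes
    return daily_bytes * consuming_apps


# Hypothetical workload: 200 GB/day consumed by 5 applications in the other cloud.
print(cross_cloud_bytes(200 * GB, 5, replicate_once=False) // GB)  # 1000
print(cross_cloud_bytes(200 * GB, 5, replicate_once=True) // GB)   # 200
```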

Finally, Cluster Linking provides a way for you to migrate entire clusters across different environments, whether from on premises to the cloud or between cloud providers. Cluster Linking fully preserves the offsets of messages within a topic, meaning consumers reading from a topic in one environment can start reading from the same topic in a different environment without the risk of reprocessing or skipping critical messages. This makes it far easier and less risky to migrate clusters to the cloud or between cloud providers. And for organizations looking to offload cluster management to the world’s foremost Kafka experts, Cluster Linking provides a simple solution to start migrating workloads over to our fully managed service, Confluent Cloud.
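The migration guarantee above rests on offsets matching across clusters. The toy Python model below, which is our own illustration rather than how Cluster Linking is implemented, contrasts a copy that preserves offsets with one that re-produces records into a new topic and therefore renumbers them from 0. The `Record` type and the helper functions are hypothetical; as in Kafka, a committed offset is the next offset the consumer will read.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    offset: int
    value: str


def reproduce(source: list[Record]) -> list[Record]:
    """Copy by re-producing into a new topic: the destination assigns fresh offsets from 0."""
    return [Record(offset=i, value=r.value) for i, r in enumerate(source)]


def mirror(source: list[Record]) -> list[Record]:
    """Offset-preserving copy: every record keeps its source offset."""
    return list(source)


def read_from(log: list[Record], committed: int) -> list[str]:
    """Resume a consumer: return the values at offsets >= the committed offset."""
    return [r.value for r in log if r.offset >= committed]


# Retention already deleted offsets 0-999 on the source, so the log starts at 1000.
source = [Record(1000 + i, f"event-{1000 + i}") for i in range(5)]
committed = 1003  # the consumer has processed offsets 1000-1002

print(read_from(source, committed))             # ['event-1003', 'event-1004']
print(read_from(mirror(source), committed))     # ['event-1003', 'event-1004']
print(read_from(reproduce(source), committed))  # [] (offsets no longer line up)
```

With renumbered offsets, the consumer’s committed position points past the end of the copied log, so a real Kafka consumer would fall back to its `auto.offset.reset` policy and either reprocess or skip records, which is the risk that offset preservation removes.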

Note: As with any preview feature, Cluster Linking is not yet supported in production environments and is recommended for testing purposes only. Changes may be made before the feature reaches general availability.

To learn more about Cluster Linking, read our dedicated blog post on the feature.

How these features deliver on Project Metamorphosis

The new features in Confluent Platform 6.0 are tremendously valuable on their own, but isolating the value of each doesn’t tell the whole story. These features complement one another in ways that transform Kafka into a more cloud-native data system that has many of the attributes we have highlighted during Project Metamorphosis: greater elasticity, improved cost effectiveness, infinite data retention, and global availability.

Scaling elastically

Cloud-native data systems are expected to scale up as demand peaks and scale down as demand falls, removing the need for detailed capacity planning while ensuring businesses can meet the real-time expectations of customers. The process of scaling Kafka is not without its challenges though. Each step can be quite manual, and partitions need to be rebalanced across the brokers to realize the intended performance benefits of scaling the platform.

Tiered Storage and Self-Balancing Clusters enable you to scale Kafka with greater elasticity. Both of these features are also fully compatible with Confluent Operator, which simplifies deploying and running Kafka as a cloud-native system on Kubernetes.

With Self-Balancing Clusters, the process of reassigning partitions after scaling up the number of brokers in a cluster is abstracted away. Self-Balancing Clusters automatically recognize the presence of new brokers and trigger a subsequent partition reassignment. This means that scaling the platform is as easy as adding additional brokers (which only takes a single command with Confluent Operator).

The time needed to complete a partition rebalance is also significantly shortened with Tiered Storage. Because Tiered Storage offloads older data to object storage, partition replicas hold less data on the broker itself. As a result, partition reassignments require less data to be shifted across the cluster, and adding brokers leads to more immediate performance benefits. Tiered Storage also decouples the compute and storage layers in Kafka, allowing for a more efficient scaling process. Rather than adding brokers as a means of adding more storage resources to a cluster, you can now scale up storage independent of compute, and vice versa.

Operating cost effectively

Now more than ever, businesses are evaluating how they can operate more efficiently. By leveraging Tiered Storage, Self-Balancing Clusters, and Admin REST APIs, you can significantly decrease your TCO for Kafka and the data architecture that underpins your business.

First, these features decrease Kafka’s infrastructure costs by enabling clusters to run on a smaller infrastructure footprint. Tiered Storage, in particular, greatly reduces costs by offloading older topic data from expensive local disks to inexpensive object storage. Self-Balancing Clusters further the savings by ensuring the data that remains on the brokers is evenly distributed across the cluster, optimizing the utilization of infrastructure resources.

The elastic scaling process supported by Tiered Storage and Self-Balancing Clusters results in even greater infrastructure savings. Clusters can scale up and scale down quickly depending on their workload in the moment. This way, businesses can avoid over-provisioning resources up front and only have to pay for infrastructure when they actually need it.
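To see why elastic scaling avoids over-provisioning, compare the broker-hours of a static cluster sized for peak load with those of a cluster resized every hour. This Python sketch assumes that cost scales with broker-hours, that scaling is instantaneous, and a hypothetical per-broker capacity of 50 MB/s; the daily demand profile is invented for illustration. In practice scaling is not instantaneous, and that delay is what Tiered Storage and Self-Balancing Clusters aim to shorten.

```python
import math


def brokers_needed(demand_mbps: float, per_broker_mbps: float) -> int:
    """Smallest broker count that covers the demand (never fewer than one)."""
    return max(1, math.ceil(demand_mbps / per_broker_mbps))


def broker_hours(hourly_demand: list[float], per_broker_mbps: float, elastic: bool) -> int:
    """Total broker-hours over the period.

    Static: provision for the peak hour around the clock.
    Elastic: provision each hour for that hour's demand (assumes instant scaling).
    """
    needed = [brokers_needed(d, per_broker_mbps) for d in hourly_demand]
    if elastic:
        return sum(needed)
    return max(needed) * len(needed)


# Hypothetical 24-hour profile in MB/s: quiet night, busy day, short midday peak.
demand = [50] * 8 + [200] * 4 + [400] * 4 + [200] * 4 + [50] * 4

print(broker_hours(demand, per_broker_mbps=50, elastic=False))  # 192
print(broker_hours(demand, per_broker_mbps=50, elastic=True))   # 76
```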

Second, these features reduce costs associated with lost productivity by making Kafka easier to manage. As previously mentioned, Tiered Storage and Self-Balancing Clusters simplify initial capacity planning, scaling the cluster, and conducting partition reassignments. Additionally, Admin REST APIs make operators more productive by simplifying cluster management, saving hours of engineering time on these tedious, manual processes.

Tiered Storage, Self-Balancing Clusters, and Admin REST APIs complement many existing features in Confluent Platform that also decrease costs across a variety of categories and allow businesses to operate Kafka with greater efficiency. Cumulatively, these enterprise-grade features and services can help your business reduce TCO by up to 40%, thus covering the cost of a Confluent subscription many times over.

Storing infinite data

Customers expect relevant, personalized experiences in real time when interacting with most business applications. Kafka was designed to serve these applications, collecting, storing, processing, and rerouting real-time event data at any scale. However, business decisions and customer experiences cannot rely solely on today’s data and need historical context in order to provide more accurate insights and better experiences. Previously, businesses faced a difficult trade-off: they could use Kafka for both real-time and historical event data, but only by accepting significant infrastructure expenses and the challenges of reaching massive scale.

With Tiered Storage, you no longer face a trade-off between retaining more data over longer periods of time and controlling your infrastructure costs. Instead, you can retain data in Kafka indefinitely, which opens up several new and exciting event streaming use cases:

- Build event streaming applications that leverage both real-time and historical events to provide better customer experiences and improved insights from a much broader set of data

- Create a single, centralized system of record for all events within your organization with a dramatically lower TCO and infrastructure footprint

- Train machine learning models using a historical stream of data and move them seamlessly to production to make real-time predictions with ksqlDB

- Meet regulatory data retention requirements without needing to build additional infrastructure into your architecture

While Tiered Storage enables Kafka to retain infinite event data, ksqlDB offers a complementary solution to easily process those event streams. With its capacity to simplify stream processing architectures and its lightweight SQL-based syntax, ksqlDB can be used to enrich that event data and build complete event streaming applications. And with infinite retention, you also no longer have to worry about storage constraints when creating new streams and tables with ksqlDB.

Deploying globally

For businesses with global scale and 24×7 operations, data architectures need to bridge both on-premises datacenters and public cloud providers across different continents and need to provide high resiliency. Replication tools that do not fully preserve topic offsets provide only partial solutions, though. For Kafka to act as the central nervous system of these organizations, we are investing in simplifying the process of deploying interconnected clusters that span the globe.

In Confluent Platform 5.4, we introduced Multi-Region Clusters to deploy a single Kafka cluster across multiple datacenters, ensuring higher availability and dramatically simplifying disaster recovery operations. Cluster Linking provides a complementary solution for connecting independent Kafka clusters to make event data ubiquitously and globally available throughout your organization.

By combining these two solutions, you can build the data architecture that best fits your business needs. You can deploy independent clusters across on-premises datacenters and public cloud providers and then seamlessly connect them via Cluster Linking, enabling topics to be shared across the globe. And for individual clusters that power mission-critical applications with high uptime SLAs, you can leverage Multi-Region Clusters to minimize cluster downtime with automated client failover during disaster events. Best of all, both of these solutions have no dependencies and fully preserve topic offsets.


Interested in more?

Check out Tim Berglund’s video summary or podcast for an overview of what’s new in Confluent Platform 6.0 and download Confluent Platform 6.0 to get started with a complete event streaming platform built by the original creators of Apache Kafka.
