
Introducing Cluster Authorization Using RBAC, Audit Logs, and BYOK for Enterprise-Grade Security

When it comes to launching your next app with data in motion, few things pose the same risk to going live as meeting requirements for data security and compliance, and meeting them is no easy task. Ensuring enterprise-grade security for an Apache Kafka® deployment is a complex effort that can delay apps from getting to market by months and sometimes years. Given the massive impact of a data breach or any significant operational downtime, there’s simply no cutting corners when it comes to protecting data. This level of security has become a table-stakes expectation for businesses and their customers. So shouldn’t the same expectation hold true for the cloud services used by you and your team?

With Confluent’s fully managed, cloud-native service for Apache Kafka, we equip teams with the complete set of tools that they need to build and launch their data-in-motion apps faster without bypassing strict security and compliance requirements. In support of our ongoing efforts to empower customers in the most security-conscious and regulated industries, we are excited to announce the general availability of Cluster Role-Based Access Control (RBAC) and Audit Logs within Confluent Cloud, along with other platform improvements, including updates to our Bring Your Own Key (BYOK) offering.

Before we get into Cluster RBAC and Audit Logs, let’s take a look at some of the baseline security features of Confluent Cloud:

- Authentication: We provide SAML/SSO so that you can utilize your identity provider to authenticate user logins to Confluent Cloud.

- Authorization: We provide granular access control through service accounts/access control lists (ACLs) so that you can gate application access to critical Kafka resources like topics and consumer groups.

- Data confidentiality: We encrypt all data at rest by default. Also, all the network traffic to clients (data in transit) is encrypted with TLS 1.2 (TLS 1.0 and 1.1 are no longer supported and have been disabled).

- Private networking: We also provide secure and private networking options, including VPC/VNet peering, AWS/Azure Private Link, and AWS Transit Gateway.

- Compliance and privacy: We provide built-in SOC 1/2/3 and ISO 27001 compliance, GDPR/CCPA readiness, and more.
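Because TLS 1.0 and 1.1 are disabled, any client connecting to Confluent Cloud must be able to negotiate TLS 1.2 or newer. As a minimal illustration of that version floor from the client side, here is a sketch using Python’s standard `ssl` module. The function name is hypothetical, and real Kafka clients expose their own TLS settings, so treat this as a demonstration of the policy rather than as Confluent client configuration.

```python
import ssl


def make_tls12_client_context() -> ssl.SSLContext:
    """Hypothetical helper: a client TLS context that refuses TLS 1.0 and 1.1."""
    # create_default_context() turns on certificate verification and hostname checking.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Set the floor to TLS 1.2 so the client never negotiates an older protocol version.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

Starting from `create_default_context()` rather than a bare `SSLContext` keeps certificate and hostname verification on, so raising the minimum version does not come at the cost of weaker server authentication.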

Now let’s take a look at what’s new:

Control identity and access management with Cluster Role-Based Access Control (RBAC)

When running Kafka at scale within your organization, it’s important to restrict users to only the permissions required for their roles and responsibilities. With ACLs and service accounts, you’ve always been able to gate access to Kafka resources like topics. With Cluster Role-Based Access Control (RBAC), now generally available, you can set role-based permissions per user to gate management access to critical resources like production environments, sensitive clusters, and billing details. New users can be easily onboarded with specific roles as opposed to broad access to all resources. Popular amongst Confluent Platform customers, Cluster RBAC is now offered fully managed within Confluent Cloud.

Cluster RBAC enables you to:

- Easily set user permissions so that developers can rapidly iterate on applications as they build them without compromising the security and stability of production applications

- View org users and their roles

- View resources and who has access to them

The following role assignments are available today in Confluent Cloud across all cluster types:

- The OrganizationAdmin role allows you to access and manage all the resources within the organization

- The EnvironmentAdmin role allows you to access and manage all the resources within the scoped environment

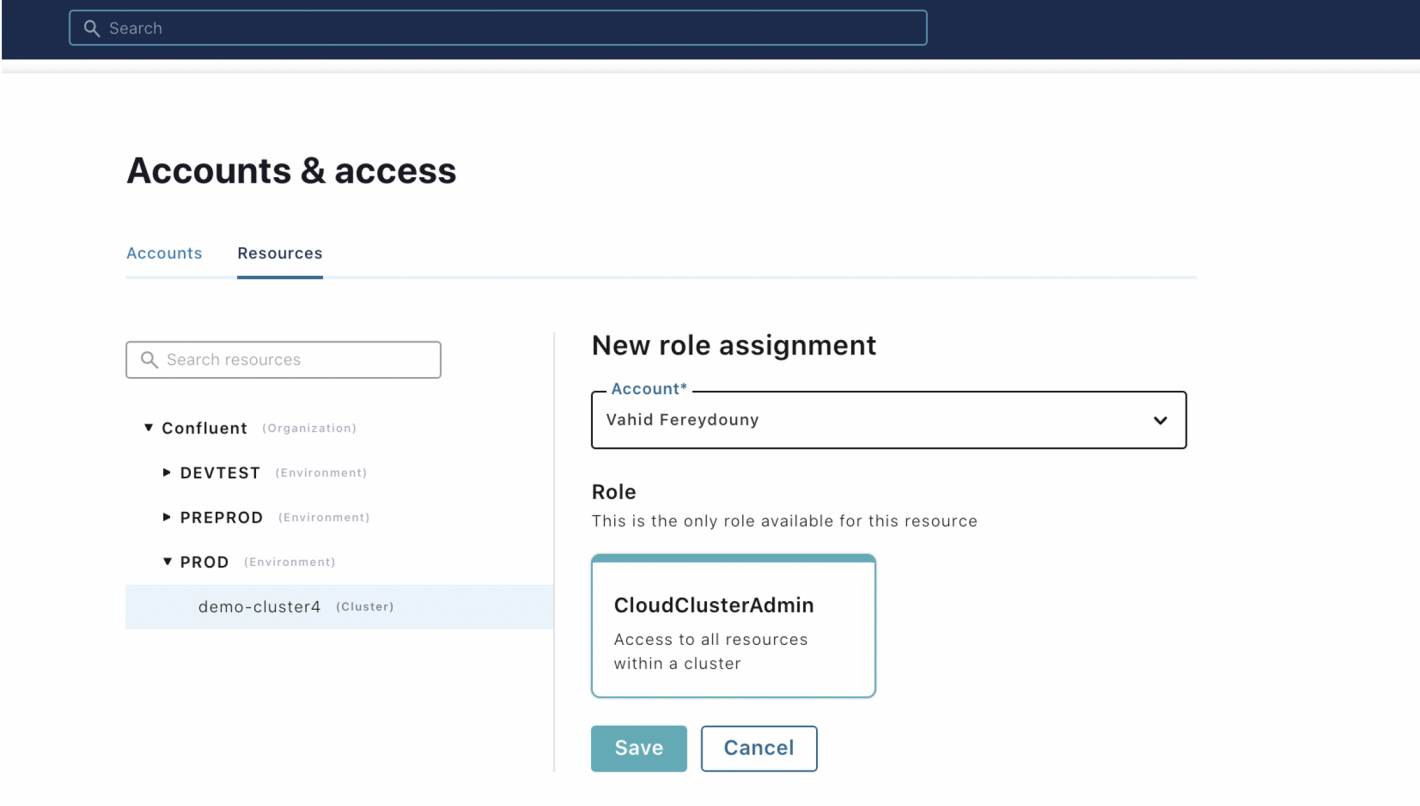

- The CloudClusterAdmin role allows you to access and manage all the resources within the scoped cluster

These roles are assigned to a user for specific resources. For example, if a user is assigned CloudClusterAdmin for Cluster 1 in the TESTDEV environment, then that user can only manage the resources within Cluster 1. That user can’t manage Cluster 2 in the TESTDEV environment or any clusters in the PRODUCTION environment. If another user is assigned EnvironmentAdmin for the TESTDEV environment, then that user can manage both Cluster 1 and Cluster 2 in the TESTDEV environment but not any clusters in the PRODUCTION environment.
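The scoping rules in this example can be modeled as a simple containment check: an organization-level binding covers everything, an environment-level binding covers every cluster in that environment, and a cluster-level binding covers only that one cluster. The Python sketch below is a hypothetical model for reasoning about role bindings, not the Confluent Cloud API; `RoleBinding` and `can_manage_cluster` are illustrative names.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RoleBinding:
    """Hypothetical model of one role granted on one resource scope."""
    role: str                          # "OrganizationAdmin", "EnvironmentAdmin", or "CloudClusterAdmin"
    environment: Optional[str] = None  # used by EnvironmentAdmin and CloudClusterAdmin
    cluster: Optional[str] = None      # used by CloudClusterAdmin only


def can_manage_cluster(bindings: list[RoleBinding], environment: str, cluster: str) -> bool:
    """Return True if any binding lets the user manage `cluster` in `environment`."""
    for b in bindings:
        if b.role == "OrganizationAdmin":
            return True  # organization scope covers every environment and cluster
        if b.role == "EnvironmentAdmin" and b.environment == environment:
            return True  # environment scope covers every cluster inside it
        if (b.role == "CloudClusterAdmin"
                and b.environment == environment and b.cluster == cluster):
            return True  # cluster scope covers exactly one cluster
    return False


if __name__ == "__main__":
    # The two users from the example above (variable names are illustrative).
    cluster_admin = [RoleBinding("CloudClusterAdmin", environment="TESTDEV", cluster="Cluster 1")]
    env_admin = [RoleBinding("EnvironmentAdmin", environment="TESTDEV")]
    print(can_manage_cluster(cluster_admin, "TESTDEV", "Cluster 1"))  # True
    print(can_manage_cluster(cluster_admin, "TESTDEV", "Cluster 2"))  # False
    print(can_manage_cluster(env_admin, "TESTDEV", "Cluster 2"))      # True
    print(can_manage_cluster(env_admin, "PRODUCTION", "Cluster 1"))   # False
```

Because a user can hold several role bindings at once, the check succeeds as soon as any one of them covers the target cluster.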

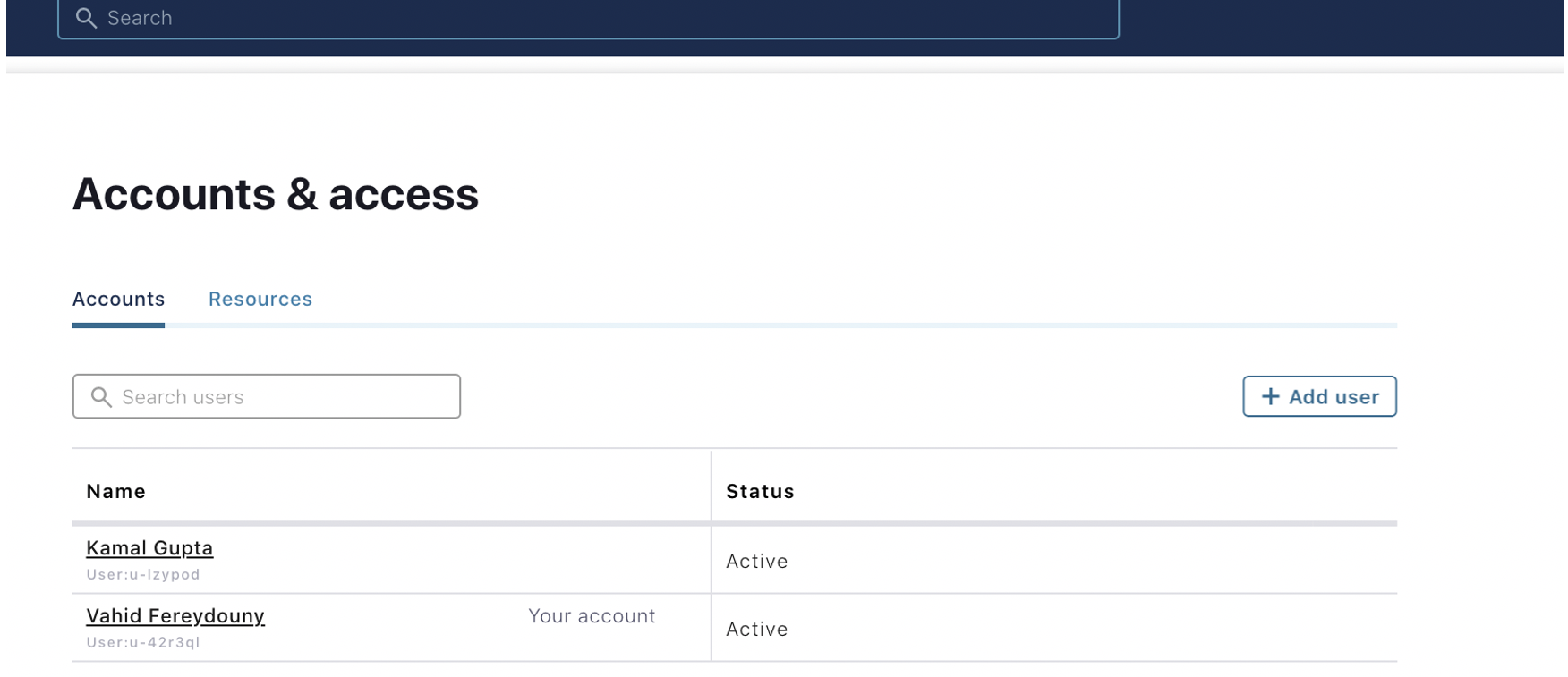

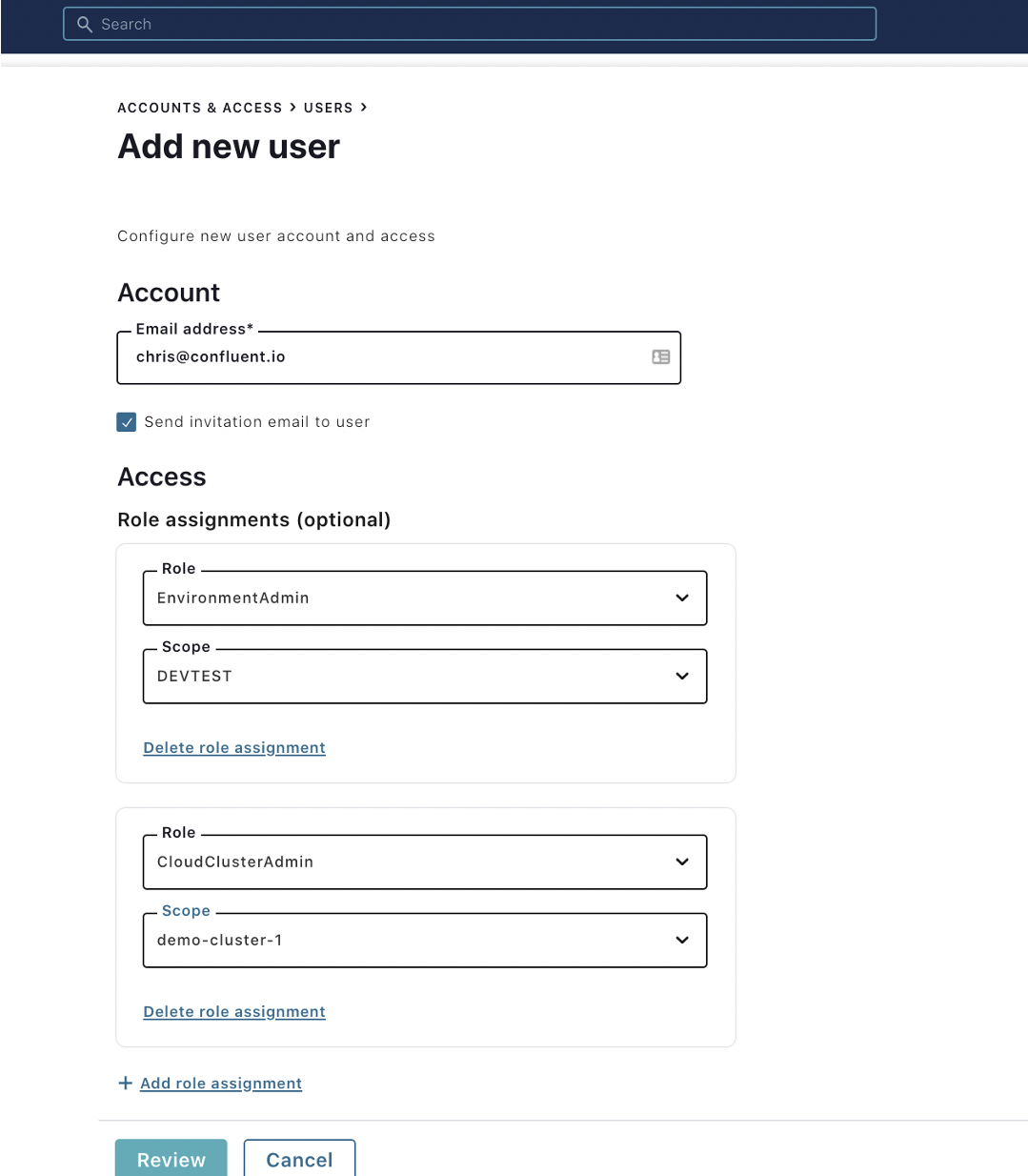

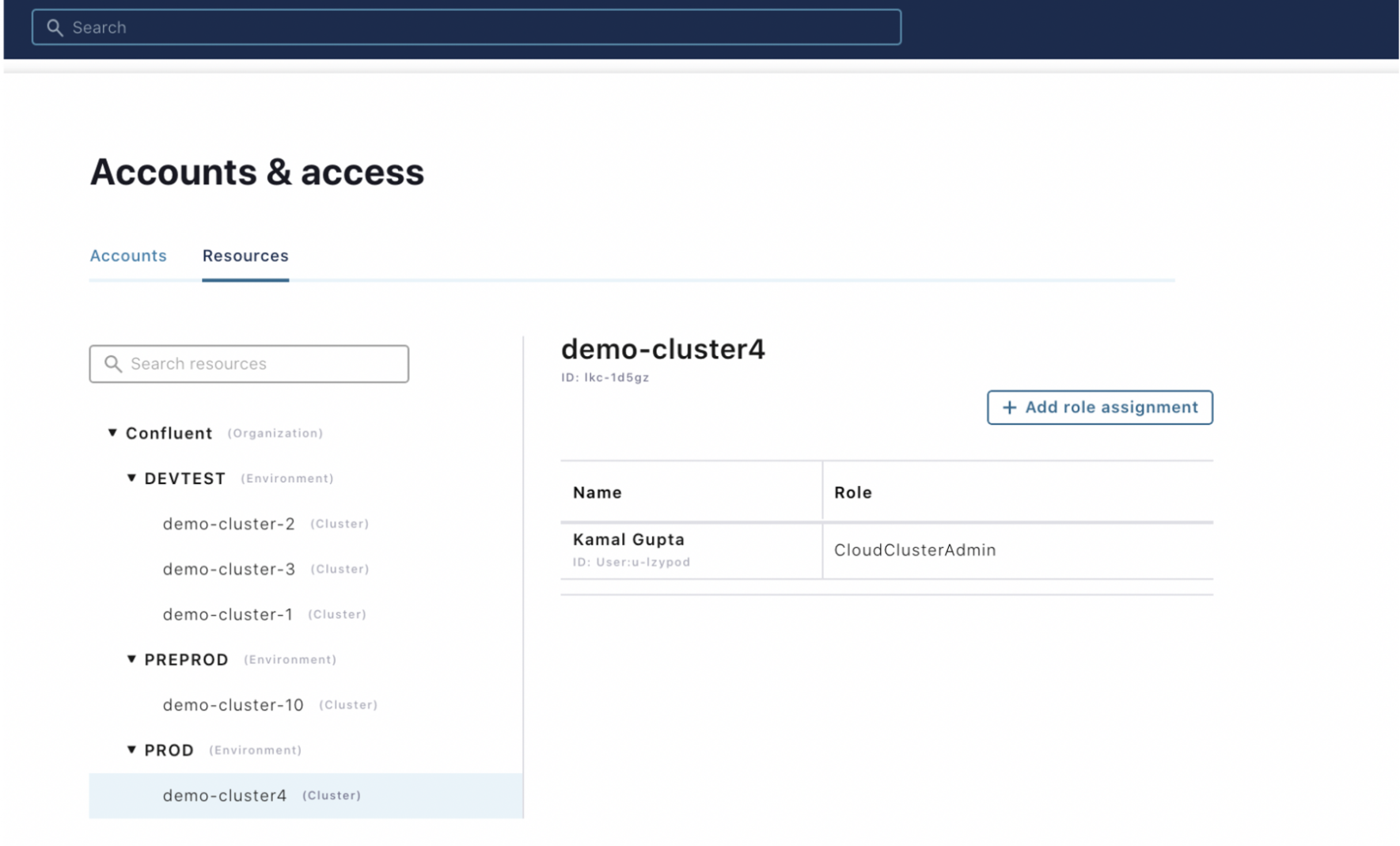

See RBAC in the Confluent user interface:

- View the list of users.

- Invite a new user and grant them different roles. (Notice that a user can have multiple roles.)

- View the resource hierarchy and view/manage the role bindings for a given resource.

Monitor and audit suspicious account activity with Audit Logs

Once your Kafka deployment is up and running with a large team working in it, it’s critical to keep a close eye on who is touching your data and what exactly they’re doing with it. Now generally available within Confluent Cloud, Audit Logs provide an easy way to track user and application resource access so that you can identify potential anomalies and bad actors.

Audit Logs are enabled by default, so you don’t need to take any action to set them up. Events are captured in a specific Kafka topic that you can consume in real time like any other Kafka topic, then analyze and audit in your service of choice, such as third-party tools like Splunk or Elastic. Note that Kafka cluster Audit Logs will be available only for Dedicated and Standard clusters.
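Because the audit log is an ordinary Kafka topic, reading it looks like configuring any other consumer. Here is a minimal sketch assuming the `confluent-kafka` Python client, which accepts librdkafka-style configuration keys. The bootstrap server, API key, and secret are placeholders you would look up in your organization’s audit log settings, the helper name is hypothetical, and the topic name `confluent-audit-log-events` is an assumption here; confirm it in the Confluent Cloud console before relying on it.

```python
def audit_log_consumer_config(bootstrap_servers: str, api_key: str,
                              api_secret: str, group_id: str) -> dict:
    """Hypothetical helper: consumer settings for reading audit log events."""
    return {
        "bootstrap.servers": bootstrap_servers,
        "security.protocol": "SASL_SSL",  # TLS-encrypted connection with SASL authentication
        "sasl.mechanisms": "PLAIN",       # API key and secret are sent as SASL/PLAIN credentials
        "sasl.username": api_key,
        "sasl.password": api_secret,
        "group.id": group_id,
        "auto.offset.reset": "earliest",  # with no committed offset, start at the oldest retained event
    }


# Usage, assuming `pip install confluent-kafka` and real credentials:
#
# from confluent_kafka import Consumer
# consumer = Consumer(audit_log_consumer_config(
#     "<bootstrap-server>", "<api-key>", "<api-secret>", "audit-log-reader"))
# consumer.subscribe(["confluent-audit-log-events"])  # assumed topic name
# while True:
#     msg = consumer.poll(1.0)
#     if msg is None or msg.error():
#         continue
#     print(msg.value().decode("utf-8"))  # one JSON audit event per record
```

Using a dedicated consumer group for audit processing lets that pipeline track its own offsets independently of any other readers of the topic.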

Here is an example of an Audit Log event showing that a user has made a Kafka CreateTopics API request, resulting in an authorization check:

```json
{
  "type": "io.confluent.kafka.server/authorization",
  "data": {
    "methodName": "kafka.CreateTopics",
    "serviceName": "crn://confluent.cloud/kafka=lkc-a1b2c",
    "resourceName": "crn://confluent.cloud/kafka=lkc-a1b2c/topic=departures",
    "authenticationInfo": {
      "principal": "User:123456"
    },
    "authorizationInfo": {
      "granted": false,
      "operation": "Create",
      "resourceType": "Topic",
      "resourceName": "departures",
      "patternType": "LITERAL",
      "superUserAuthorization": false
    },
    "request": {
      "correlationId": "123",
      "clientId": "adminclient-42"
    }
  },
  "id": "fc0f727d-899a-4a22-ad8b-a866871a9d37",
  "time": "2021-01-01T12:34:56.789Z",
  "datacontenttype": "application/json",
  "source": "crn://confluent.cloud/kafka=lkc-a1b2c",
  "subject": "crn://confluent.cloud/kafka=lkc-a1b2c",
  "specversion": "1.0"
}
```
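Since each event is plain JSON, filtering for refused requests like the one above takes only a few lines. This Python sketch relies only on the field names visible in the sample event (`type`, `data.authorizationInfo.granted`, and so on); the function name and the shape of the returned summary are hypothetical. You could apply it to each record value read from the audit log topic and forward the non-`None` results to your alerting tool of choice.

```python
import json
from typing import Optional


def denied_authorization(raw: str) -> Optional[dict]:
    """Return a short summary if `raw` is a denied authorization event, else None."""
    event = json.loads(raw)
    if event.get("type") != "io.confluent.kafka.server/authorization":
        return None  # not an authorization check, so nothing to flag
    data = event.get("data", {})
    authz = data.get("authorizationInfo", {})
    if authz.get("granted", True):
        return None  # granted (or no verdict recorded): only surface refusals
    return {
        "time": event.get("time"),
        "principal": data.get("authenticationInfo", {}).get("principal"),
        "method": data.get("methodName"),
        "resource": data.get("resourceName"),
    }
```

For the sample event above, the summary would identify `User:123456` attempting `kafka.CreateTopics` on the `departures` topic. Keeping the summary small makes it easy to index in tools like Splunk or Elastic while the full event stays available in the topic.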

Ensure data confidentiality and restrict unwanted access with Bring Your Own Key (BYOK) encryption

Fundamental to securing your cloud Kafka deployment is data confidentiality, both while at rest and while in transit. As mentioned previously, we encrypt all data at rest by default. Also, all the network traffic to clients (data in transit) is encrypted with TLS 1.2.

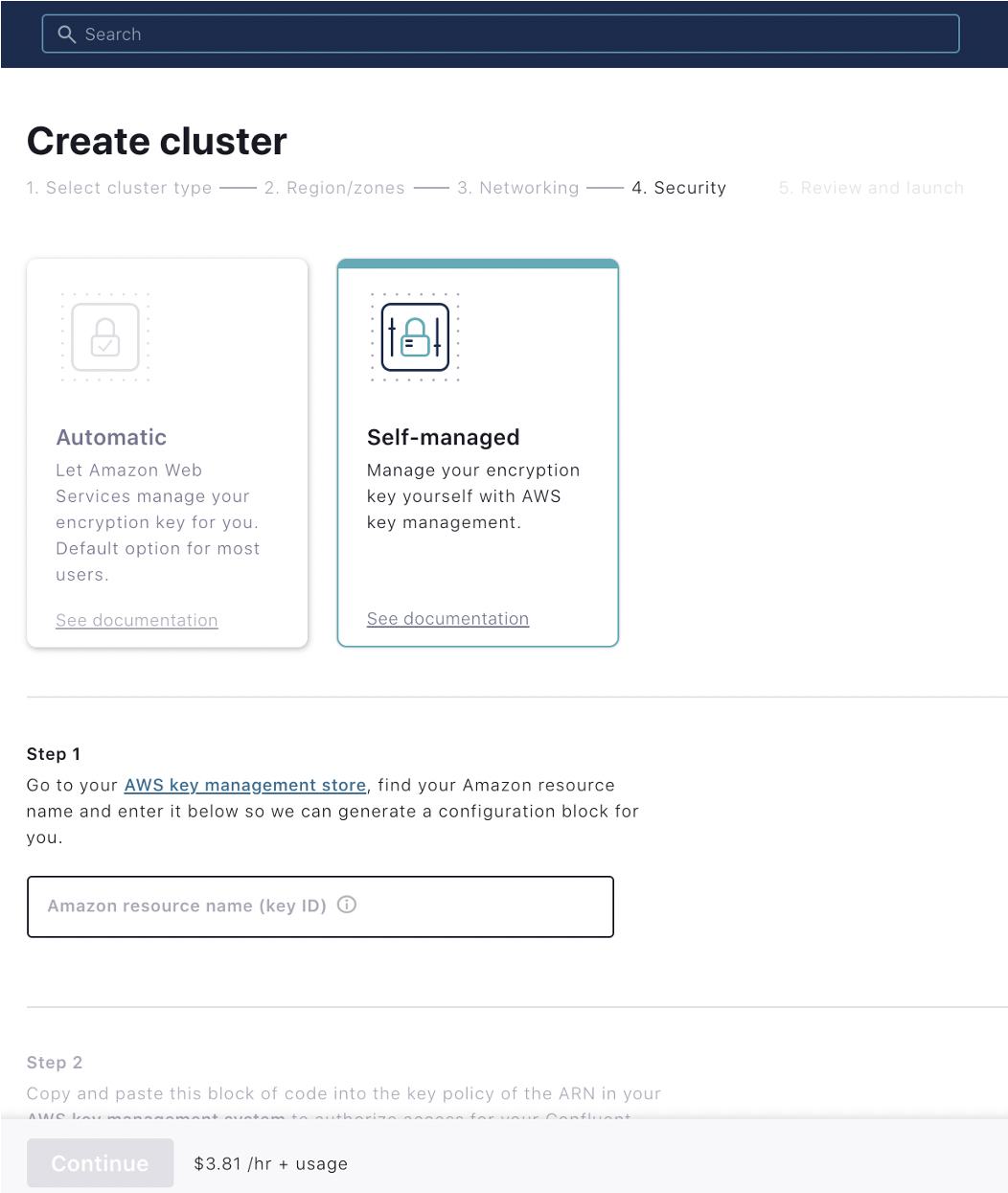

To further strengthen security of data at rest, Confluent supports Bring Your Own Key (BYOK) encryption on Dedicated clusters for AWS (generally available) and Google Cloud (now available in preview); we are working to bring this feature to Azure in the future. BYOK lets you encrypt data at rest with your own custom key and gives you more control over disabling access, should the need ever arise.

- Data-at-rest encryption with your own key is a must-have and often a blocker to adoption and expansion, especially for organizations with strong InfoSec requirements.

- BYOK encryption gives users a high level of confidence that they control access to their data at rest, especially when it comes to potential government access to data on drives (subpoena, etc.).

- BYOK encryption is a fundamental security feature that showcases our commitment to security and is a key part of our enterprise security capabilities.

BYOK can be easily enabled when you provision a Dedicated cluster, through simple, step-by-step guided provisioning in the Confluent Cloud user interface.

Secure data movement with Private Link

Confluent provides a number of private networking options, such as VPC/VNet peering and AWS Transit Gateway. The inherent architecture of private networking allows for an air-gapped network that protects against malicious users on the internet. For those requiring even further security, we support both AWS PrivateLink and Azure Private Link. Private Link allows one-way, secure connections from your VPC/VNet to AWS, Azure, and third-party services, providing protection against data exfiltration. It is popular for its unique combination of security and simplicity, and for these reasons it is the cloud providers’ recommended method for secure networking. Self-serve setup is available today on new AWS and Azure Dedicated clusters.

Learn more and get started

We’re excited to bring these new security features to you. Every minute you spend managing Kafka or developing security controls that are not core to your business is time taken away from building impactful customer experiences and products. For more information about compliance and security initiatives in Confluent Cloud, please see the Trust and Security page.

Ready to start using the most secure cloud service for data in motion? Sign up for a free trial of Confluent Cloud and receive $400 to spend within Confluent Cloud during your first 60 days. Additionally, you can use the promo code CL60BLOG for an extra $60 of free Confluent Cloud usage.*
